ABSTRACT

For autonomous navigation, robots and vehicles must have accurate estimates of their current state (i.e., location and orientation) within an inertial coordinate frame. If a map is given a priori, the process of determining this state is known as localization. For outdoor operation, localization is often assumed to be a solved problem when GPS measurements are available. However, in urban canyons and other areas where GPS accuracy is degraded, additional techniques using other sensors and filtering are required. This thesis aims to provide one such technique based on monocular vision.

First, the system requires a map, which consists of a set of geo-referenced video images. This map is generated offline, before autonomous navigation is required. When an autonomous vehicle is later deployed, it is equipped with an on-board camera. As the vehicle moves and obtains images, it compares its current images with images from the pre-generated map.
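As a rough illustration of what a map of geo-referenced images could look like in code, the sketch below stores each map frame alongside a position and looks up the frame nearest to a queried position. The names `MapImage`, `build_map` and `nearest_map_image` are hypothetical, and representing the geo-reference as a single distance along the road in meters is an assumption made for simplicity, not a detail taken from the thesis.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass(frozen=True)
class MapImage:
    frame_index: int   # index of the frame within the map video
    position_m: float  # assumed geo-reference: distance along the road, in meters


def build_map(frame_positions):
    """Build a map, sorted by position, from (frame_index, position_m) pairs."""
    return sorted((MapImage(i, p) for i, p in frame_positions),
                  key=lambda m: m.position_m)


def nearest_map_image(road_map, position_m):
    """Return the map image closest to position_m (road_map must be non-empty)."""
    positions = [m.position_m for m in road_map]
    i = bisect_left(positions, position_m)
    # Only the neighbours on either side of the insertion point can be closest.
    candidates = road_map[max(i - 1, 0):i + 1]
    return min(candidates, key=lambda m: abs(m.position_m - position_m))


road_map = build_map([(0, 0.0), (10, 5.0), (20, 10.0)])
print(nearest_map_image(road_map, 6.0).frame_index)
```

Keeping the map sorted by position makes the lookup a binary search, which matters little for a short section of road but would help if the map were scaled up.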

To conduct this comparison, a method known as image correlation, developed at Johns Hopkins University by Rob Thompson, Daniel Gianola and Christopher Eberl, is used. The output from this comparison is used within a particle filter to provide an estimate of vehicle location. Experimentation demonstrates the particle filter’s ability to successfully localize the vehicle within a small map that consists of a short section of road. Notably, no initial assumption of vehicle location within this map is required.
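The localization loop can be sketched as a one-dimensional particle filter over position along the road. This is a minimal sketch under stated assumptions, not the thesis implementation: the image correlation output is replaced by a simulated noisy position measurement with a Gaussian likelihood, and the function names, noise values and road length are all hypothetical. Spreading the initial particles uniformly over the map reflects the absence of any initial assumption about vehicle location.

```python
import math
import random


def particle_filter_step(particles, control, measurement, road_length,
                         motion_noise=0.5, meas_noise=2.0, rng=random):
    """One predict/update/resample cycle for a 1-D position along the road."""
    # Predict: shift each particle by the commanded displacement plus noise,
    # clamped to the extent of the mapped road.
    moved = [min(max(p + control + rng.gauss(0.0, motion_noise), 0.0), road_length)
             for p in particles]
    # Update: weight particles by a Gaussian likelihood of the measurement
    # (an assumed stand-in for a likelihood derived from image correlation).
    weights = [math.exp(-0.5 * ((measurement - p) / meas_noise) ** 2) for p in moved]
    if sum(weights) == 0.0:
        # Every weight underflowed to zero: fall back to uniform weights.
        weights = [1.0] * len(moved)
    # Resample with replacement, in proportion to weight.
    return rng.choices(moved, weights=weights, k=len(moved))


def localize(road_length=100.0, n_particles=500, steps=30, speed=1.0, seed=0):
    """Simulate a drive and return (estimated position, true position)."""
    rng = random.Random(seed)
    # No prior knowledge of location: spread particles uniformly over the map.
    particles = [rng.uniform(0.0, road_length) for _ in range(n_particles)]
    true_pos = 20.0
    for _ in range(steps):
        true_pos += speed
        measurement = true_pos + rng.gauss(0.0, 2.0)  # simulated measurement
        particles = particle_filter_step(particles, speed, measurement,
                                         road_length, rng=rng)
    return sum(particles) / len(particles), true_pos


estimate, truth = localize()
print(f"estimate={estimate:.2f} m, truth={truth:.2f} m")
```

In this toy setting the particle cloud collapses around the true position after a few measurements; with a real image-based likelihood the weights would typically be multimodal wherever different stretches of road look alike.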

BACKGROUND

Localization is the act of determining the location of an (autonomous) agent within a map given a priori. Localization is necessary in almost every type of mobile robot system: regardless of the size of the robot, the system will most likely need to know where it, or some other object, is. Many aspects of robotics depend on this ability, including, but not limited to, robotic navigation. Success in navigation requires success at each of the four building blocks of navigation.

PROBLEM DEFINITION & SOLUTION

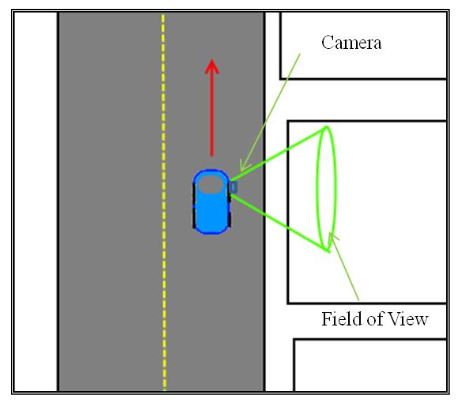

Figure 3: Top down view of camera mounting configuration

The first step in constructing a map is obtaining a video Vm. Video is recorded while the vehicle is driven down a length of road. The camera should be configured to capture the view out of the passenger-side window, as shown in Figure 3.

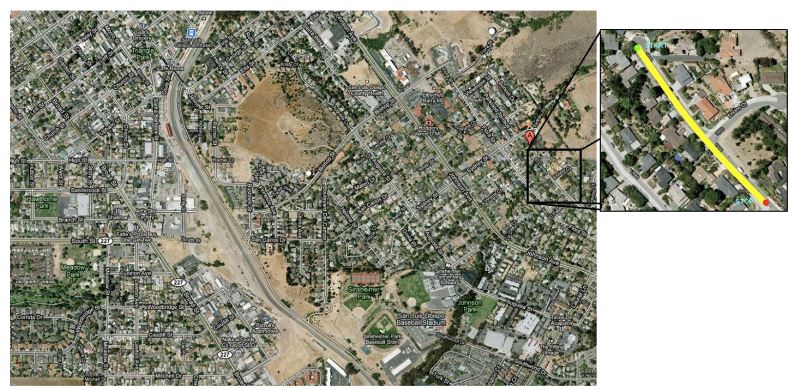

Figure 1: City Vs. Street Map

The proposed solution to this problem can be broken into two basic components: map creation and localization. This section outlines how each of these tasks should be accomplished. The implementation chapter, on the other hand, goes into the technical details of the experiment.

IMPLEMENTATION

Figure 5: Camera Mounted

A Logitech QuickCam Pro 5000 was used to obtain all video footage. At the time of purchase, it had very good reviews and was one of the best web cameras for capturing fast motion without blurring. The camera was attached with double-sided tape to the passenger-side window of a 1997 Honda CR-V (Figure 5).

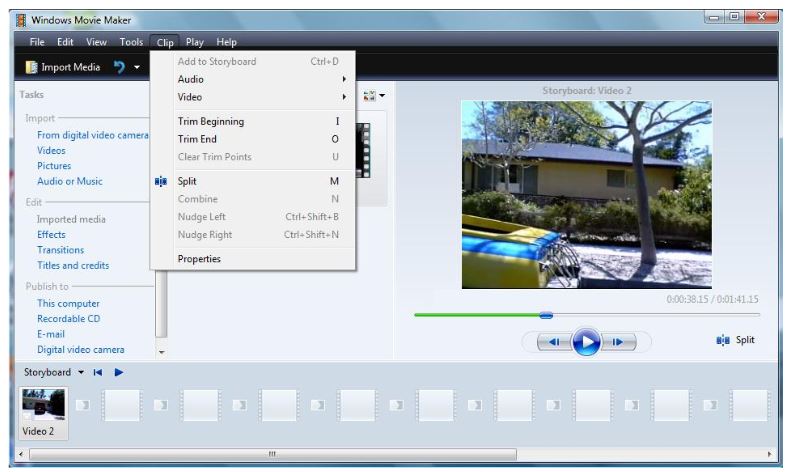

Figure 7: Trim Tool

Initially, the video was opened and edited using Microsoft Windows Movie Maker from Windows Vista (not XP or Windows 7). This specific version was used because of its unusual stability while processing visual effects and its ability to use both included and custom filters. These filters can be applied seamlessly to videos and proved helpful in section 4.1.3. Using the trim function in Movie Maker (shown in Figure 7), the video was trimmed to include only the visual data from the desired region of the experiment.

RESULTS

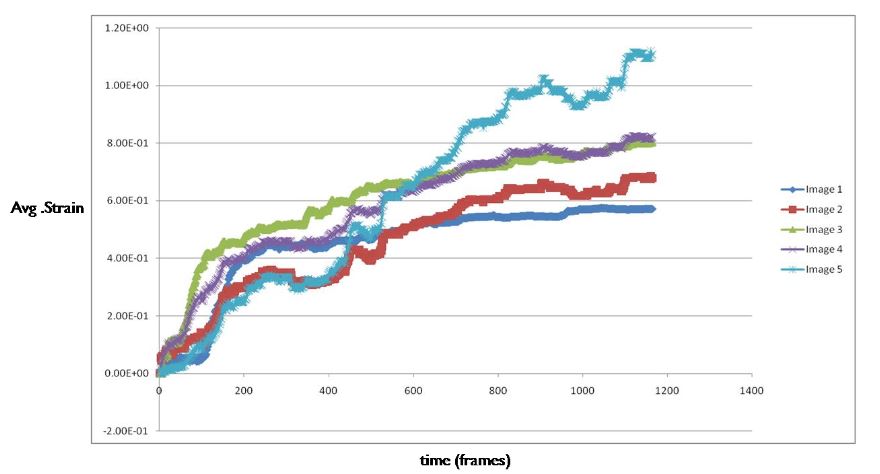

Figure 24: Image Correlation Strain

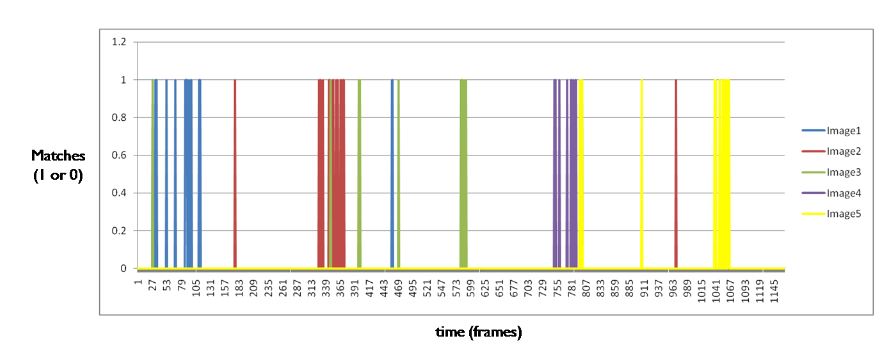

Figure 25: K(t) results for each geo-located image

Figure 24 plots strain (y-axis) against frame number (x-axis). While some of the plateaus are easy to distinguish in Figure 24, there is enough variance and random error to warrant the data processing described in section 4.2.7. The image matching described in section 4.2.7 produced the matches shown in Figure 25.
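The processing from section 4.2.7 is not reproduced in this excerpt. As a generic stand-in only, the sketch below shows how a sliding median can suppress isolated spikes in a per-frame signal so that plateaus become easier to separate. The function name, window size and sample values are all hypothetical and are not taken from the thesis data.

```python
from statistics import median


def sliding_median(values, window=5):
    """Smooth a sequence with a centered sliding median (window must be odd)."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    # Near the ends the window is truncated rather than padded.
    return [median(values[max(i - half, 0):i + half + 1])
            for i in range(len(values))]


# Made-up signal: two plateaus (about 1 and about 5), each with one outlier.
noisy = [1, 1, 9, 1, 1, 5, 5, 5, 0, 5, 5]
print(sliding_median(noisy, window=3))
```

A median is used here rather than a moving average because it removes single-frame outliers without blurring the step between plateaus.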

CONCLUSION

The data shown in chapter 5 indicate that the particular configuration of technology described in this thesis is a viable means of accomplishing autonomous localization. The image correlation technology successfully finds matches between images. The particle filter not only shows how a vehicle's position can be localized from a known starting point, but also demonstrates how it can be implemented to handle the Kidnapped Robot scenario, in which the initial position is unknown.

The experiments were successful in that the position error decreases toward zero over time. The results demonstrate the ability to determine, to within X m, where the vehicle was as a function of time while the car was in motion for this experiment. This experiment has shown the potential for the system to be scaled up and to aid in addressing common issues with autonomous outdoor localization. Although the system is not currently implemented in real time, real-time localization is expected to be possible if one or more changes are made.

It is believed that current hardware cannot process the data fast enough to perform the necessary calculations in real time. With sufficiently fast hardware, and perhaps changes to the correlation and other processing functions, parts of the implementation could be changed or upgraded to accomplish this future goal. Additional ideas for further improvements are contained in the next chapter of this thesis.

Source: California Polytechnic State University

Author: Matthew P. Schlachtman