ABSTRACT

With the prevalence of computing, many workers today are confined to a desk within an office. By sitting in these positions for long periods of time, workers are prone to develop one of many musculoskeletal disorders (MSDs), such as carpal tunnel syndrome. In order to prevent MSDs in the long term, workers must employ good sitting habits. One promising method to ensure good workplace posture is through camera monitoring. To date, camera systems have been used to determine posture in a clean environment. However, an occluded and cluttered background, which is typical in an office setting, poses a great challenge for a computer vision system trying to detect the desired objects.

In this thesis, we design and propose components that assess good posture using information gathered from a Microsoft Kinect camera. To do so, we generate a data set of posture captures to test and train, applying crowd-sourced voting to determine ratings for a subset of these captures. Leveraging this data set, we apply machine learning to develop a classification tool. Finally, we explore and compare the usage of depth information in conjunction with a traditional RGB sensor array and present novel implementations of a wrist locating method.

BACKGROUND

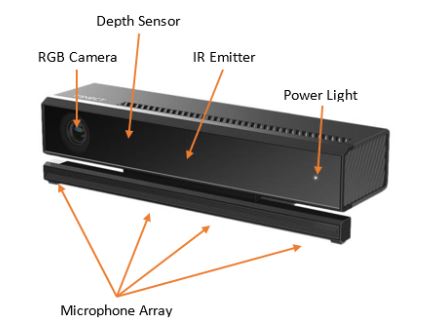

Figure 2.1: Microsoft Kinect v2 camera

To calculate depth information, the Kinect camera projects infrared (IR) light into a space through an array of three emitters located at the center of the Kinect. An IR receiving array, labeled as a depth sensor in Figure 2.1, then collects and examines the returning IR light.

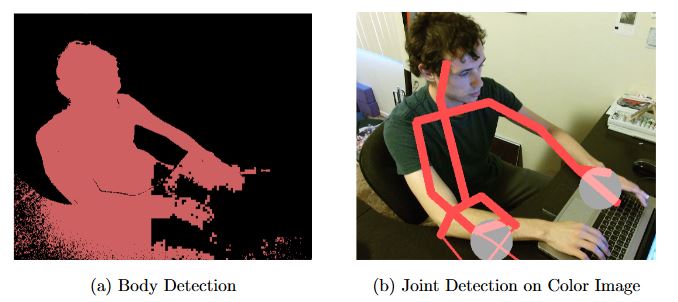

Figure 2.6: Unsuccessful body detection and resulting joint detection overlay

Occlusion exposes a shortcoming of the joint detection algorithm. In Figure 2.6, we capture a person sitting in front of a computer, partially occluded by a desk at an angle. In body extraction, the Kinect incorrectly interprets portions of the desk as being part of the subject.

RELATED WORKS

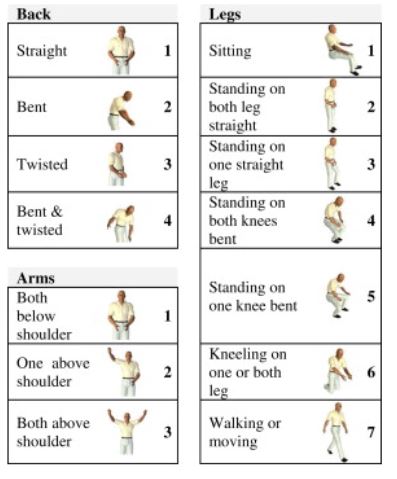

Figure 3.1: OWAS risk codes for back, shoulders, and legs

In a paper published in 2013, “Using Kinect™ sensor in observational methods for assessing posture at work”, Jose Antonio Diego-Mas and Jorge Alcaide-Marzel of Universitat Politecnica de Valencia, Camino de Vera, Spain, develop an assessment system that categorizes posture risk levels. The system follows the ergonomic rules specified by the OWAS method. Using this method, they classify body posture under distinct four-digit codes based on back, shoulders, legs, and load (see Figure 3.1 for reference codes).
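To make the four-digit coding concrete, the following is a minimal sketch of how such a code could be assembled from one digit per category. The function name `owas_code` and the per-category digit ranges (4 back, 3 shoulder/arm, 7 leg, and 3 load categories) are assumptions taken from the commonly published OWAS tables, not from the paper's implementation, and the mapping from a code to a risk level is deliberately left out.

```python
# Assumed number of categories per body part in the OWAS method.
# These ranges are an assumption for illustration, not taken from the thesis.
OWAS_RANGES = {"back": 4, "shoulders": 3, "legs": 7, "load": 3}


def owas_code(back, shoulders, legs, load):
    """Combine four category digits into a four-digit posture code string."""
    digits = {"back": back, "shoulders": shoulders, "legs": legs, "load": load}
    for part, value in digits.items():
        upper = OWAS_RANGES[part]
        if not 1 <= value <= upper:
            raise ValueError(f"{part} must be between 1 and {upper}, got {value}")
    # Digit order follows the text: back, shoulders, legs, load.
    return "".join(str(digits[part]) for part in ("back", "shoulders", "legs", "load"))


example_code = owas_code(back=2, shoulders=1, legs=4, load=1)
```

A lookup table keyed on these codes would then supply the risk level, as in Figure 3.1.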

RATED POSTURE DATASET

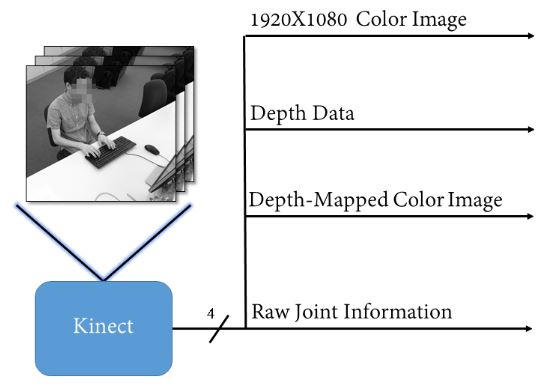

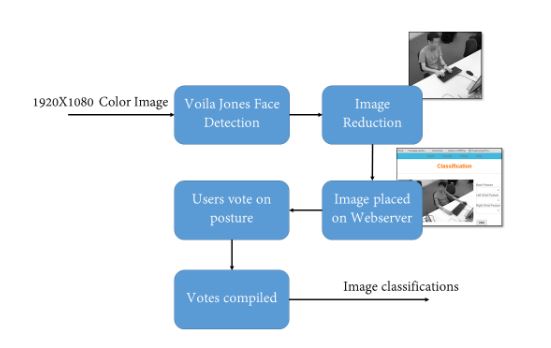

Figure 4.1: Initial workflow from Microsoft Kinect capture

Figure 4.1 describes the data recorded from Kinect captures of subjects. For each recorded subject, we take a static capture once every 5-10 seconds, containing depth, mapped, and color images. We collect joint information for all joints as a continuous stream of data, with a hardware-limited temporal resolution of up to thirty captures per second.

Figure 4.2: Steps to generate classifications for image frames

In order to determine posture classifications, we employ crowd-sourcing. We build a system that uses a webserver, queries users to rate postures on a scale, and records the results as a set of classified training data. Figure 4.2 shows this process. In the following sections, we describe each step in detail.
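The step from individual votes to a single label per capture can be sketched as follows. This is only an illustration: the median as the aggregation rule, the `min_votes` cutoff, the capture identifiers, and the rating values are all assumptions, since the text here does not specify how votes are combined.

```python
from collections import defaultdict
from statistics import median


def aggregate_ratings(votes, min_votes=3):
    """Collapse (capture_id, rating) votes into one median rating per capture.

    Captures with fewer than min_votes votes are left unlabeled.
    Both the median rule and the cutoff are hypothetical choices.
    """
    by_capture = defaultdict(list)
    for capture_id, rating in votes:
        by_capture[capture_id].append(rating)
    return {
        capture_id: median(ratings)
        for capture_id, ratings in by_capture.items()
        if len(ratings) >= min_votes
    }


# Hypothetical votes as they might be recorded by the rating webserver.
votes = [
    ("cap_001", 4), ("cap_001", 5), ("cap_001", 4),
    ("cap_002", 2), ("cap_002", 1),
]
labels = aggregate_ratings(votes)
```

Using the median rather than the mean keeps a single outlying vote from shifting the label, which may matter when only a handful of raters see each capture.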

BACK POSTURE CLASSIFICATION

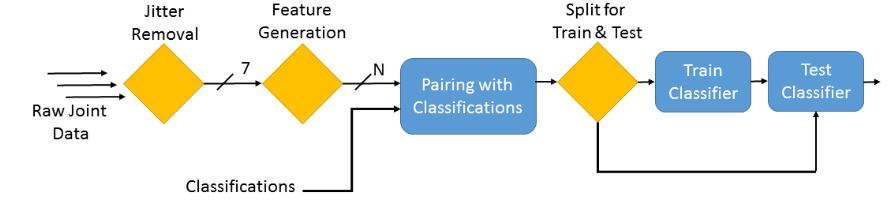

Figure 5.1: Overview of back classification test design

In each stage of Figure 5.1, we apply multiple techniques and compare their effectiveness. Specifically, during the jitter removal and classifier training stages, we employ several distinct methods. In the following sections, we describe the methodology for each stage.
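As one illustration of the jitter removal stage, a sliding median filter over a single joint coordinate might look like the sketch below. The conclusion mentions a median filter of span 10 as one input type; the centered window and the handling of the stream edges here are assumptions, and the sample values are invented.

```python
from statistics import median


def median_filter(samples, span=10):
    """Smooth a 1-D stream with a centered sliding median.

    The window covers span // 2 samples on each side of the current
    sample and is truncated at the start and end of the stream.
    """
    half = span // 2
    smoothed = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        smoothed.append(median(samples[lo:hi]))
    return smoothed


# Hypothetical y-coordinate of one joint with a single jitter spike.
joint_y = [1.0, 1.0, 9.0, 1.0, 1.0]
smoothed_y = median_filter(joint_y, span=3)
```

In practice the same filter would be applied independently to each coordinate of each tracked joint before the data reaches the classifier.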

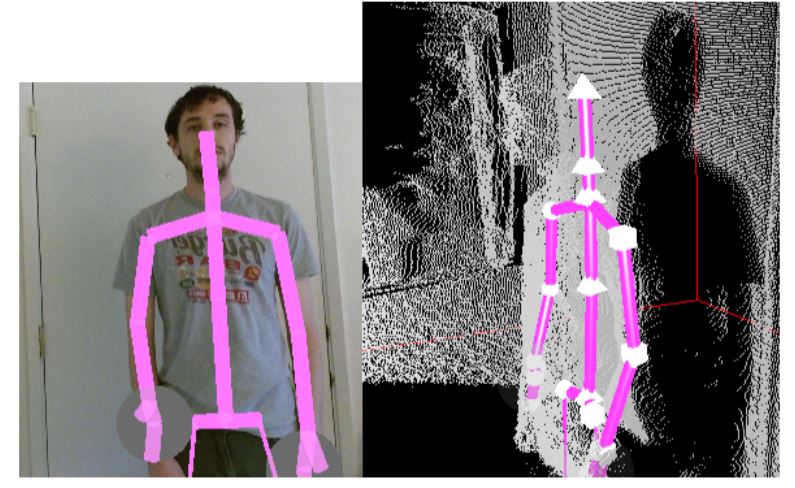

Figure 5.4: Sample captures on subject with good posture

In Figure 5.4 and Figure 5.5, we provide examples of joint captures for a subject exhibiting good and bad posture, respectively. In both images, we observe a two-dimensional front-facing representation and a three-dimensional angled view. In the first, the subject sits upright, with a straight neck alignment.

TOWARDS WRIST LOCATION

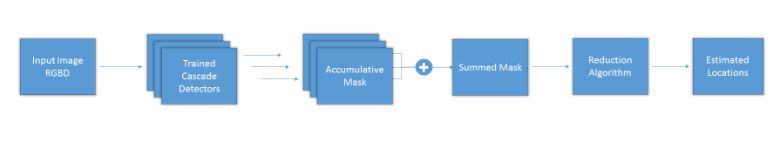

Figure 6.1: Overall flowchart of testing setup

Figure 6.1 portrays the steps followed in testing a hand-wrist locating scheme. To develop this system, we perform a series of iterative improvements, where the goal is to maximize wrist detections and minimize false positive results. Within our iterative improvement algorithm, we employ cascade object detection, provided as a tool with Matlab’s Computer Vision System Toolbox.

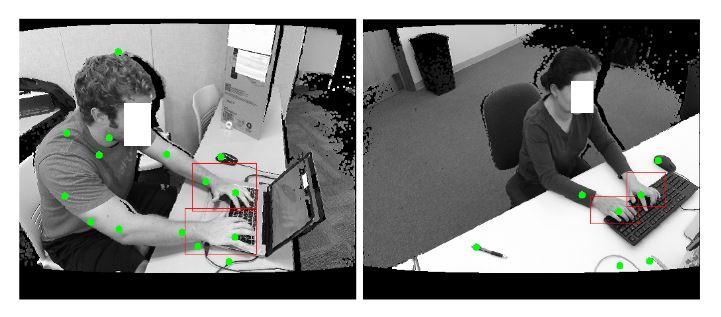

Figure 6.3: Two results of cascade detection using simple detection

In Figure 6.3, we provide two images of subjects in which the cascade detection discovers more hands than are present. To be more precise, we observe nine hands in the first image, denoted by green dots. Though we predict two true-positive points within the hand bounding boxes, we also see seven false positives. In the second image, we detect four hands, two being false positives.
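The tally of true and false positives described above can be sketched as a point-in-box check. This is an illustrative sketch only: the function name `count_detections`, the box format, and all coordinates are hypothetical, and it counts a detection as correct whenever its point falls inside any labeled hand bounding box.

```python
def count_detections(points, hand_boxes):
    """Return (true_positives, false_positives) for detected points.

    points: list of (x, y) detection centers.
    hand_boxes: list of (x_min, y_min, x_max, y_max) labeled hand regions.
    """
    def inside(point, box):
        x, y = point
        x_min, y_min, x_max, y_max = box
        return x_min <= x <= x_max and y_min <= y <= y_max

    true_positives = sum(
        1 for point in points if any(inside(point, box) for box in hand_boxes)
    )
    return true_positives, len(points) - true_positives


# Hypothetical detections and labeled hand boxes for one image.
detections = [(105, 210), (300, 215), (50, 40), (400, 90)]
boxes = [(90, 200, 130, 240), (280, 200, 320, 240)]
tp, fp = count_detections(detections, boxes)
```

Tracking these two counts per image gives a simple way to compare successive iterations of the detector against the goal of more wrist detections and fewer false positives.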

CONCLUSION

In this thesis, we sought to work towards a means of preventing office workers from suffering from posture-induced MSDs. We set out to design components behind a system to detect bad posture, fulfilling the following objectives:

1. Easy to set up in a cubicle or work environment

2. Physically non-invasive so that the worker can continue working free from distractions

3. Allow for some form of feedback on how to correct bad posture

4. Allow for incorporation of posture classification on multiple joints

To fulfill these objectives, we generated a data set containing 1,186 depth images, 1,136 color images, and 1,122 depth-mapped color images from 17 subjects, totalling 48,569 frames of captured joint-tracking data. We then produced a rated data set consisting of 11,58 ratings across back, left wrist, and right wrist. Using the classification and joint-tracking data, we trained several models on back posture, including KNN, Neural Network, Random Forest, and SVM, each with four separate data input types and three controls.

In total, we trained 242 distinct configurations of machine learning classifiers. Across these configurations, the best results we achieved were 97% sensitivity, 70% specificity, and a kappa score of 0.5. Our highest scoring classifiers were a bootstrap control SVM with a raw data input type, and a bootstrap neural network with a median filter of span 10.
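For readers unfamiliar with the three reported metrics, the sketch below computes sensitivity, specificity, and Cohen's kappa from the four cells of a binary confusion matrix. The counts in the example are invented for illustration and are not results from this thesis.

```python
def binary_metrics(tp, fn, fp, tn):
    """Compute sensitivity, specificity, and Cohen's kappa from a 2x2 confusion matrix."""
    total = tp + fn + fp + tn
    sensitivity = tp / (tp + fn)  # fraction of actual positives detected
    specificity = tn / (tn + fp)  # fraction of actual negatives rejected
    observed = (tp + tn) / total  # observed agreement
    # Agreement expected by chance, from the row and column totals.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total ** 2
    kappa = (observed - expected) / (1 - expected)
    return sensitivity, specificity, kappa


# Hypothetical counts, chosen only to keep the arithmetic easy to follow.
sensitivity, specificity, kappa = binary_metrics(tp=40, fn=10, fp=20, tn=30)
```

Kappa corrects raw agreement for the agreement expected by chance, which is why a classifier can show high sensitivity and still earn only a moderate kappa.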

FUTURE WORK

In this section, we outline some areas of work that follow up on the research presented here. To highlight different facets of the future work, we divide it into multiple topics. We first describe direct enhancements to the work presented within our contributions, then move on to general future work on the topic of posture biofeedback.

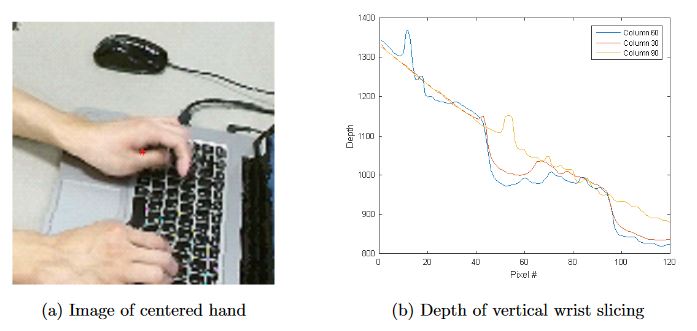

Figure 8.1: Sample for growing wrist region

Within this thesis, we presented a method to perform wrist locating. Based on the data we present, it should be possible to use these points to grow a region containing hand information. Both color and depth information can be leveraged. Using color information, we can extract wrists and arms. Using depth information, we can apply a threshold to separate the left and right arms, and we can also separate the arms from the desk. In Figure 8.1, we present some preliminary results of performing a vertical depth slice of hand regions in a test region.
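The depth thresholding idea can be sketched as a simple band-pass mask over a depth image. This is a minimal illustration under stated assumptions: depth values are taken to be millimeters, a value of 0 is treated as an invalid reading, and the function name `threshold_depth`, the band limits, and the sample grid are hypothetical.

```python
def threshold_depth(depth_mm, near_mm, far_mm):
    """Return a boolean mask that is True where near_mm <= depth <= far_mm.

    depth_mm is a list of rows of depth readings. Readings of 0 are
    assumed to mean "no measurement" and are always masked out.
    """
    return [
        [depth > 0 and near_mm <= depth <= far_mm for depth in row]
        for row in depth_mm
    ]


# Hypothetical 3x3 depth patch: an arm at roughly 800 mm above a desk at roughly 1200 mm.
depth_patch = [
    [0, 800, 820],
    [1200, 810, 1210],
    [1200, 1205, 1210],
]
arm_mask = threshold_depth(depth_patch, near_mm=700, far_mm=900)
```

The resulting mask could seed the region growing step, with color information refining the boundary where arm and desk depths are too close to separate.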

Source: California Polytechnic State University

Author: Matthew Crussell
