ABSTRACT

The parallelized algorithm for hyperspectral biometrics uses the processing power of a GPU (Graphics Processing Unit) to compare hyperspectral images of people’s faces. The feature extraction algorithm first retrieves uniquely identifiable features from raw hyperspectral data spanning 64 bands, then creates both a database and individual target files.

Using these files, the comparison algorithm, written in CUDA C, compares a given target against the database and returns the top five matches, their calculated distances from the target, and their security clearance levels. A wireless door locking mechanism can then be engaged to simulate unlocking and re-locking any of four doors based on the returned security rating.
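The comparison step can be pictured as a brute-force nearest-neighbour search. The C sketch below is not the authors' CUDA C kernel. It assumes a fixed-length feature vector and ranks entries by squared Euclidean distance, which produces the same ordering as Euclidean distance without needing `sqrt()`. The names `Entry`, `Match`, `top_matches`, `NUM_FEATURES`, and the distance metric itself are all hypothetical.

```c
#include <stddef.h>

#define NUM_FEATURES 8  /* hypothetical feature-vector length */
#define TOP_K 5         /* number of matches reported */

/* Hypothetical database record: name field, features, clearance level. */
typedef struct {
    char name[51];               /* 50-character name field plus NUL */
    float features[NUM_FEATURES];
    int clearance;
} Entry;

typedef struct {
    size_t index;    /* position of the match in the database */
    float distance;  /* squared Euclidean distance from the target */
} Match;

/* Squared Euclidean distance; ranks entries the same way as the
   Euclidean distance without needing sqrt(). */
static float squared_distance(const float *a, const float *b) {
    float sum = 0.0f;
    for (int i = 0; i < NUM_FEATURES; i++) {
        float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

/* Writes the TOP_K closest entries to out[], nearest first, and returns
   how many were written (fewer than TOP_K if the database is smaller). */
size_t top_matches(const float *target, const Entry *db, size_t n,
                   Match out[TOP_K]) {
    size_t count = 0;
    for (size_t e = 0; e < n; e++) {
        float d = squared_distance(target, db[e].features);
        /* A full list only accepts entries closer than its last one. */
        if (count == TOP_K && d >= out[TOP_K - 1].distance) continue;
        /* Insertion step: shift farther matches one slot to the right. */
        size_t pos = count < TOP_K ? count : TOP_K - 1;
        while (pos > 0 && out[pos - 1].distance > d) {
            out[pos] = out[pos - 1];
            pos--;
        }
        out[pos].index = e;
        out[pos].distance = d;
        if (count < TOP_K) count++;
    }
    return count;
}
```

On a GPU, the distance computation is the part worth parallelizing: one thread per database entry could compute `squared_distance`, leaving only the small top-five selection to be done afterwards.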

The feature extraction algorithm locates features to within 2% of their actual location, and the comparison algorithm returns the target in its top five matches 65% of the time. The wireless door locking assembly works as expected, although its communication occasionally suffers from packet corruption errors. Improvements can still be made to the range of data that the feature extraction algorithm accepts and to the accuracy and speed of the comparison algorithm.

DESIGN

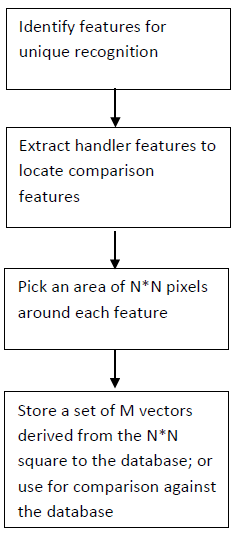

Figure 2 – Feature Extraction Algorithm Flowchart.

Figure 2 shows the flowchart for this algorithm. The data, obtained from a research project at Carnegie Mellon University, covers 46 subjects. Of these, 10 had only one session, 10 were used for training, and 26 were used to test the final algorithm. Each session contained images built from radiance information for each of 65 wavelength bands ranging from 450 nm to 1100 nm.

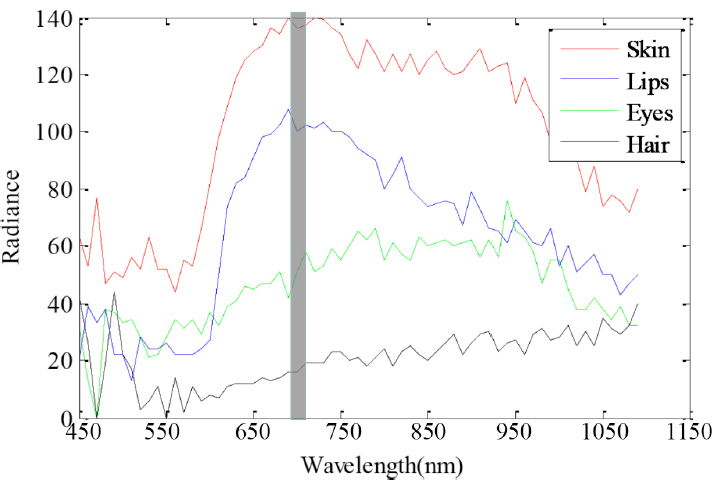

Figure 3 - Chart of radiance versus wavelength for the handle features and skin tissue of subject 2.

The next step was to extract the positions of the comparison features. Rather than locating them directly, we found it more appropriate to extract the positions of the eyes, lips, and hair, because of the radiance gradient between these attributes and the skin tissue, as Figure 3 demonstrates. These ‘handle features’ are then used to spatially map the subject’s face and derive the positions of the comparison features (cheeks, lips, forehead, and hair). The band highlighted in Figure 3 was used to extract these features.
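Locating a handle feature from the radiance gradient can be sketched on a single scanline of the chosen band. This is a minimal illustration under stated assumptions, not the project's extraction code: it assumes the feature is darker than the surrounding skin in that band, takes the strongest falling step as the entry into the feature and the strongest rising step after it as the exit, and returns the midpoint. The `cheek_below` helper and its half-eye-distance offset are hypothetical, included only to show how a comparison feature could be placed relative to a handle feature.

```c
#include <stddef.h>

/* Returns the centre of one dark handle feature (e.g. an eye) within
   row[start, end), or -1 if no falling/rising edge pair is found. */
int feature_center(const float *row, size_t start, size_t end) {
    if (end <= start + 1) return -1;
    size_t fall = start, rise = start;
    float min_grad = 0.0f, max_grad = 0.0f;
    /* Strongest negative step: first pixel inside the feature. */
    for (size_t x = start; x + 1 < end; x++) {
        float g = row[x + 1] - row[x];
        if (g < min_grad) { min_grad = g; fall = x + 1; }
    }
    if (min_grad == 0.0f) return -1;
    /* Strongest positive step after it: last pixel inside the feature. */
    for (size_t x = fall; x + 1 < end; x++) {
        float g = row[x + 1] - row[x];
        if (g > max_grad) { max_grad = g; rise = x; }
    }
    if (max_grad == 0.0f) return -1;
    return (int)((fall + rise) / 2);
}

typedef struct { int x, y; } Point;

/* Hypothetical geometry: place a cheek sample directly below an eye,
   offset by half the inter-eye distance. The ratio is illustrative. */
Point cheek_below(Point eye, int inter_eye_px) {
    Point p = { eye.x, eye.y + inter_eye_px / 2 };
    return p;
}
```

For a scanline such as `{100, 100, 100, 40, 30, 40, 100, 100}`, the feature spans indices 3 to 5 and `feature_center` returns 4.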

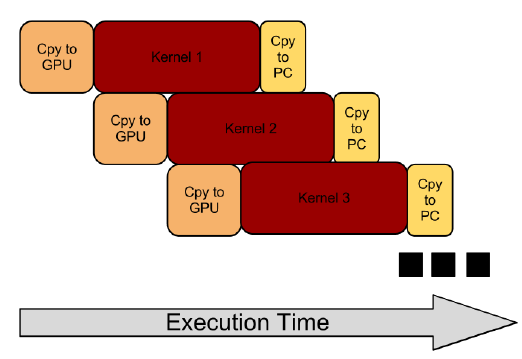

Figure 7 - Diagram of the concurrent kernel and memory copy pattern.

Without concurrency, the rows in Figure 7 would have to be placed end to end in a single row, resulting in a much longer processing runtime. The current implementation uses only five instances of the same kernel, which should be more than enough to saturate the memory copy bandwidth while making full use of the GPU’s processing power, even though Kepler can handle up to sixteen concurrent kernels (the same as the older Fermi architecture).
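The gain from overlapping copies and kernels can be estimated with an idealized timing model. The sketch below assumes that every chunk of work passes through three stages (host-to-device copy, kernel, device-to-host copy), that each stage is served by its own hardware engine, and that all chunks take the same time per stage. On GPUs with a single copy engine, uploads and downloads share one engine and the overlap is smaller. The function names and stage times are hypothetical; no CUDA API is modelled.

```c
#include <stddef.h>

#define STAGES 3  /* H2D copy, kernel, D2H copy */

/* Runtime when every chunk runs its three stages back to back. */
double serial_time(size_t chunks, const double t[STAGES]) {
    double per_chunk = 0.0;
    for (int s = 0; s < STAGES; s++) per_chunk += t[s];
    return (double)chunks * per_chunk;
}

/* Runtime when chunks are issued on separate streams and each stage has
   its own engine: a stage starts once the chunk's previous stage is done
   and the engine has finished the previous chunk. */
double overlapped_time(size_t chunks, const double t[STAGES]) {
    double engine_free[STAGES] = {0.0, 0.0, 0.0};
    for (size_t c = 0; c < chunks; c++) {
        double ready = 0.0;  /* when this chunk's previous stage ended */
        for (int s = 0; s < STAGES; s++) {
            double start = ready > engine_free[s] ? ready : engine_free[s];
            engine_free[s] = start + t[s];
            ready = engine_free[s];
        }
    }
    return engine_free[STAGES - 1];
}
```

With five chunks and stage times of 1, 2, and 1 units, the serial runtime is 20 units while the overlapped runtime is 12, matching the closed form sum(t) + (chunks - 1) * max(t) for identical chunks. The slowest stage sets the steady-state rate, which is why adding more concurrent kernels stops helping once the copy bandwidth is saturated.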

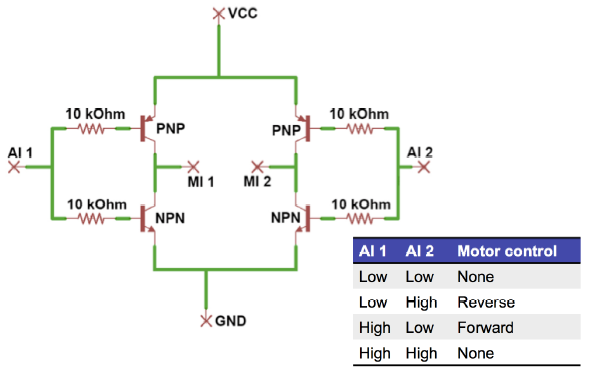

Figure 11 - Single H-bridge circuit and truth table.

The design in Figure 11 implements an H-bridge using four general-purpose BJTs (two NPN, two PNP) and four 10 kΩ resistors. AI 1 and AI 2 are inputs from the Arduino logic pins; MI 1 and MI 2 are the two leads of the motor. These parts were selected for our prototype because they were inexpensive and easily accessible. BJTs were used instead of MOSFETs because they are more tolerant of heat and static, which makes them easier to prototype with.
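The control logic on the Arduino side reduces to a small truth table. The C sketch below assumes that each input sets one motor lead, so the motor turns only when AI 1 and AI 2 differ and stays still when they are equal; the actual truth table is the one in Figure 11 and may differ. The mapping of "unlock" to forward and "re-lock" to reverse, and all names here, are hypothetical.

```c
#include <stdbool.h>

typedef enum { MOTOR_STOP, MOTOR_FORWARD, MOTOR_REVERSE } MotorState;

/* Logic levels on the two Arduino pins driving the bridge. */
typedef struct { bool ai1, ai2; } BridgeInputs;

/* Assumed truth table: differing inputs put a voltage across the motor
   in one direction or the other; equal inputs leave it stopped. */
MotorState hbridge_state(BridgeInputs in) {
    if (in.ai1 && !in.ai2) return MOTOR_FORWARD;  /* MI 1 high, MI 2 low */
    if (!in.ai1 && in.ai2) return MOTOR_REVERSE;  /* MI 1 low, MI 2 high */
    return MOTOR_STOP;                            /* no net drive */
}

/* Hypothetical: drive forward to unlock, in reverse to re-lock. */
BridgeInputs lock_command(bool unlock) {
    BridgeInputs in = { unlock, !unlock };
    return in;
}

/* Both inputs low between commands so the motor is not driven. */
BridgeInputs idle_command(void) {
    BridgeInputs in = { false, false };
    return in;
}
```

Returning to the idle state after each pulse keeps the motor from being driven continuously once the lock has moved.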

DESIGN VERIFICATION

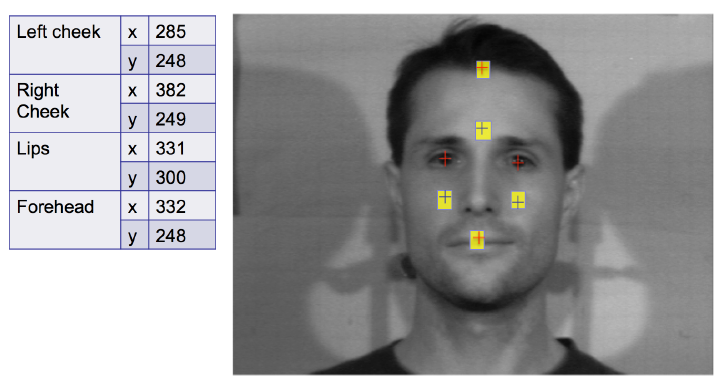

Figure 12 - Sample results obtained from the feature extraction algorithm.

The unsuccessful runs were caused by inconsistencies in the data; the algorithm could be made more robust so that it resolves such conflicts. For the demo, the database was built with 26 subjects, using the pixels inside the yellow squares in Figure 12, together with a variable number of randomly generated entries (208-1000) for benchmarking the parallel algorithm.
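One way to turn the pixels inside a square into a database entry is to average each band over the square. This is a sketch under stated assumptions, not the project's code: it assumes a band-major cube layout, `data[(b * height + y) * width + x]`, and a square of side `2 * half + 1` centred on the feature position and clipped to the image. The `Cube` type and `square_mean_spectrum` are hypothetical names.

```c
#include <stddef.h>

/* Hypothetical hyperspectral cube, band-major: data[(b*h + y)*w + x]. */
typedef struct {
    const float *data;
    int width, height, bands;
} Cube;

/* Averages every band over the square centred on (cx, cy), clipped to the
   image. Writes `bands` values to out and returns the number of pixels
   averaged, or 0 if the square lies entirely outside the image. */
int square_mean_spectrum(const Cube *cube, int cx, int cy, int half,
                         float *out) {
    int x0 = cx - half < 0 ? 0 : cx - half;
    int y0 = cy - half < 0 ? 0 : cy - half;
    int x1 = cx + half >= cube->width ? cube->width - 1 : cx + half;
    int y1 = cy + half >= cube->height ? cube->height - 1 : cy + half;
    if (x0 > x1 || y0 > y1) return 0;
    int count = (x1 - x0 + 1) * (y1 - y0 + 1);
    for (int b = 0; b < cube->bands; b++) {
        double sum = 0.0;  /* accumulate in double to limit rounding */
        for (int y = y0; y <= y1; y++)
            for (int x = x0; x <= x1; x++)
                sum += cube->data[((size_t)b * cube->height + y)
                                  * cube->width + x];
        out[b] = (float)(sum / count);
    }
    return count;
}
```

Averaging over a small square rather than reading a single pixel makes each entry less sensitive to a one-pixel error in the feature location.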

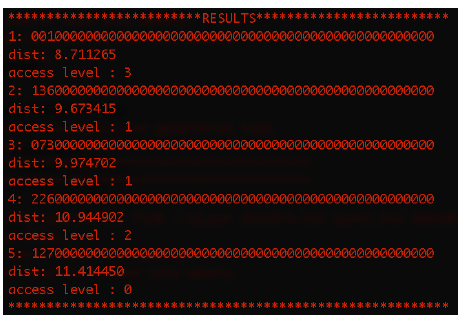

Figure 13 - Program output screenshot for subject 001.

As Figure 13 shows, the algorithm’s output is in an acceptable format. First, the name field of the match is printed (50 characters; for the test performed, each name was a three-digit number from 000 to 234 followed by forty-seven zeros). The next line contains the subject’s distance followed by the access level associated with that subject.
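The test name format described above can be generated and printed as follows. This is a minimal sketch: `make_test_name`, `print_match`, and the exact `printf` layout are hypothetical and do not reproduce the spacing of the real program's output in Figure 13.

```c
#include <stdio.h>
#include <string.h>

#define NAME_LEN 50
#define ID_DIGITS 3

/* Builds a 50-character test name: a zero-padded three-digit ID
   (000-234 in the test) followed by forty-seven '0' characters. */
void make_test_name(int id, char name[NAME_LEN + 1]) {
    snprintf(name, NAME_LEN + 1, "%03d", id);
    memset(name + ID_DIGITS, '0', NAME_LEN - ID_DIGITS);
    name[NAME_LEN] = '\0';
}

/* Prints one match: the name field on one line, then the distance
   followed by the access level on the next. */
void print_match(const char *name, float distance, int access_level) {
    printf("%s\n", name);
    printf("%f %d\n", distance, access_level);
}
```

For ID 1 this yields `001` followed by forty-seven zeros, giving exactly 50 characters.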

CONCLUSION

We have demonstrated the potential of using hyperspectral information for facial recognition, with GPUs performing the comparisons against large databases. Most of the requirements were met. The feature extraction module located the features correctly (within the error range) for 34 of 36 subjects over multiple sessions and constructed a database well suited for comparison. The comparison algorithm was quite successful and exceeded its requirements.

The target was in the top five for 65% of the subjects and was the top match for 20% of the subjects. Significant speed-ups were obtained for larger databases, demonstrating the potential of GPU processing for the computations required in hyperspectral facial recognition. The wireless door locking mechanism was fairly successful apart from the packet loss issue. The H-bridge circuits on the PCB and the Arduino code performed to specification.
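The top-five and top-match figures are both instances of a top-k identification rate. The sketch below shows how such a rate can be computed from the rank at which each test subject's own entry appears in its match list. The `ranks` input convention (1-based, 0 meaning not found) and the function name are assumptions, and the ranks used in any example are made up, not the project's results.

```c
#include <stddef.h>

/* Fraction of test subjects whose own database entry appears within the
   first k matches. ranks[i] is the 1-based position of subject i's entry
   in its sorted match list, or 0 if it was not returned at all. */
double top_k_rate(const int *ranks, size_t n, int k) {
    if (n == 0) return 0.0;
    size_t hits = 0;
    for (size_t i = 0; i < n; i++)
        if (ranks[i] >= 1 && ranks[i] <= k) hits++;
    return (double)hits / (double)n;
}
```

With k = 5 this gives the top-five rate, and with k = 1 the top-match rate, so both reported numbers come from the same ranked output.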

Various steps were taken to maintain the integrity of our project and remain consistent with the IEEE Code of Ethics. Our project followed the first pledge of the IEEE Code of Ethics by creating a technological solution that can increase public safety in a variety of applications (crime fighting, counter-terrorism, etc.). It embodies the third pledge by clearly stating the limits and range of our end result, defining the scope of the available data, and making claims accordingly. To maintain academic honesty and the integrity of our results, the data set was divided into two parts: demo and training. The demo database was never used for testing during development of the algorithm, and the training database was not used in our final presentation of results.

Source: University of Illinois

Authors: Christopher Baker | Timothée Bouhour | Akshay Malik
