ABSTRACT

Augmented reality, augmented television and second screen are cutting-edge technologies that provide end users with extra, enhanced information related to certain events in real time. This enriched information helps users better understand such events while also providing a more satisfactory experience. In the present paper, we apply this idea to human–robot interaction (HRI), that is, to how users and robots exchange information.

The ultimate goal of this paper is to improve the quality of HRI by developing a new dialog manager system that incorporates enriched information from the semantic web. This work presents the augmented robotic dialog system (ARDS), which uses natural language understanding mechanisms to provide two features: (i) non-grammar multimodal text input (verbal and/or written); and (ii) contextualization of the information conveyed in the interaction.

This contextualization is achieved by information enrichment techniques that link the information extracted from the dialog with extra information about the world available in semantic knowledge bases. This enriched or contextualized information (the terms information enrichment, semantic enhancement and contextualized information are used interchangeably in the rest of this paper) offers many possibilities in terms of HRI.

For instance, it can enhance the robot’s pro-activeness during a human–robot dialog (the enriched information can be used to propose new topics during the dialog, while ensuring a coherent interaction). Another possibility is to display additional multimedia content related to the enriched information on a visual device. This paper describes the ARDS and shows a proof of concept of its applications.

SYSTEM OVERVIEW

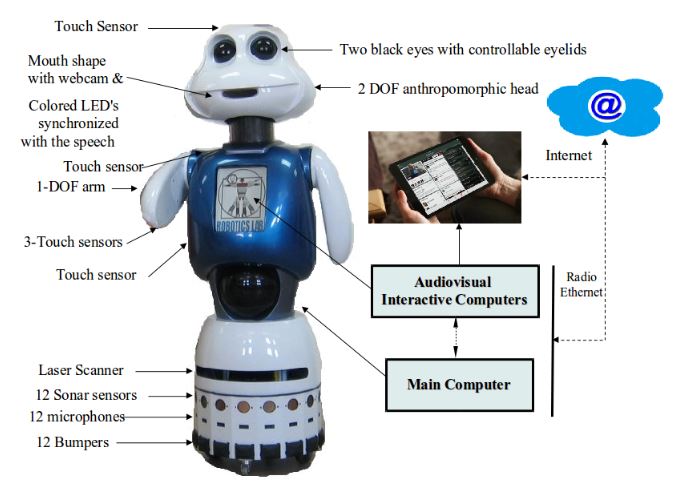

Figure 1. The social robot Maggie with an external interactive tablet

Figure 1 shows the social robot Maggie with some details of the hardware and an external tactile tablet that is accessible to the robot. Maggie, as a robotic research platform, has been described in more detail in other papers; here, we focus on the components relevant to the present paper.

NATURAL LANGUAGE LEVELS IN THE SYSTEM

The research presented in this paper spans several areas: dialog manager systems (DMS), automatic speech recognition (ASR), optical character recognition (OCR), information extraction (IEx) and information enrichment (IEn). We have been inspired by several papers from these areas and have integrated available tools into a real interactive social robot.

THE ROBOTICS DIALOG SYSTEM: THE FRAMEWORK FOR THE AUGMENTED ROBOT DIALOG SYSTEM

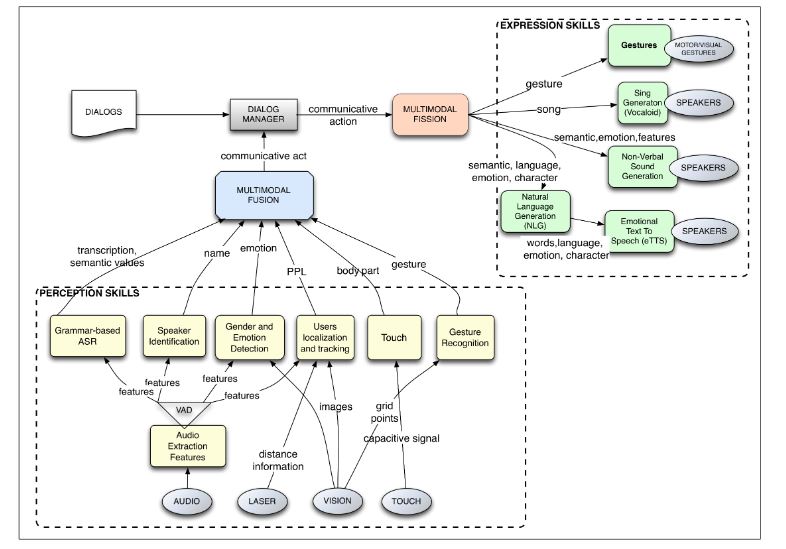

Figure 2. Sketch of the main components of the robotic dialog system: perception skills

Here, we briefly describe the robotic dialog system (RDS) to facilitate understanding of the rest of this paper. More details can be found elsewhere. The RDS is intended to manage the interaction between a robot and one or more users. It is integrated within the robot’s control architecture, which manages all tasks and processes, including navigation, user interaction and other robot skills (see Figure 2).

THE AUGMENTED ROBOTIC DIALOG SYSTEM

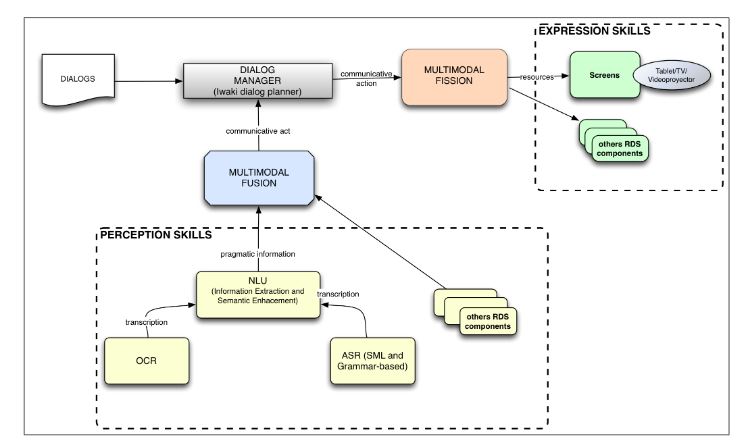

Figure 3. Sketch of the augmented robotic dialog system (ARDS)

Figure 3 depicts the different components of the ARDS. The new input functionality is provided by two input modules: OCR and non-grammar ASR. The other new functionality resides in the NLU module, which takes the information extracted by the OCR and ASR modules and extracts some of its semantic content.

Figure 5. Optical character recognition in real time

OCR has been applied extensively. However, only a few researchers have worked on real-time OCR. Milyaev et al. recognized text from real-time video (see Figure 5). The text can be written on different surfaces, and its size, position and orientation can vary.

PROOF OF CONCEPT: HRI AND ARDS

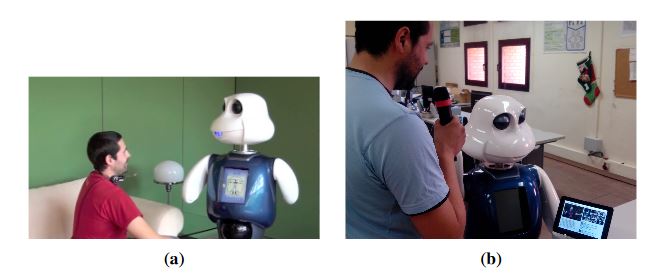

Figure 8. Human–robot dialog where the robot is showing information on a tablet about the main entities extracted from the conversation

Figure 8 shows the user and the robot interacting within the proof of concept in two different scenarios: in a common natural environment, a living room, and at the laboratory. Note how the robot is able to gaze at the external screen where the enriched information is shown, so a triangular interaction between the screen, the robot and the user is generated.

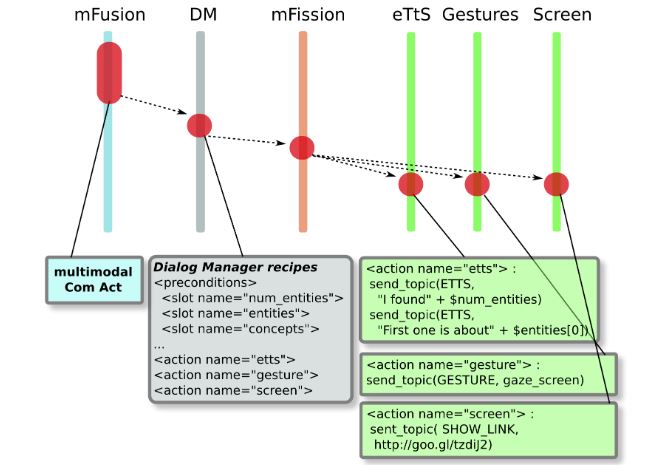

Figure 10. Information flow from multimodal fusion to multimodal expression

Figure 10 shows the information flow from the perceived communication act (CA) to the robot’s multimodal expression. The fusion module sends the CA to the DM, which fills some of the information slots defined in the active dialog. These slots hold what the information extraction module has detected (the number and names of the main entities and concepts) as well as the IEn data (URLs related to those entities).
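The slot-filling step described above can be illustrated with a minimal Python sketch. The names `CommunicationAct` and `fill_slots`, the slot keys and the example URL are hypothetical and chosen only for illustration; they are not taken from the ARDS implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CommunicationAct:
    """Hypothetical container for what the fusion module sends to the DM."""
    entities: List[str] = field(default_factory=list)   # detected by IEx
    concepts: List[str] = field(default_factory=list)   # detected by IEx
    urls: Dict[str, str] = field(default_factory=dict)  # IEn data: entity -> URL


def fill_slots(ca: CommunicationAct) -> dict:
    """Fill the information slots of the active dialog from one CA."""
    return {
        "num_entities": len(ca.entities),
        "entity_names": list(ca.entities),
        "num_concepts": len(ca.concepts),
        "concept_names": list(ca.concepts),
        # Keep only the URLs that belong to a detected entity.
        "entity_urls": {e: ca.urls[e] for e in ca.entities if e in ca.urls},
    }


# Illustrative CA with made-up content.
ca = CommunicationAct(
    entities=["Madrid"],
    concepts=["football"],
    urls={"Madrid": "https://example.org/Madrid"},
)
slots = fill_slots(ca)
```

In this sketch the filled slots are a plain dictionary that later stages, such as the multimodal expression, could read to decide what to say or display.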

CONCLUSIONS AND FUTURE RESEARCH

This paper has described the augmented robotic dialog system and its implementation in the social robot Maggie. Other similar research has already used natural language processing techniques, IEx and IEn to improve the user experience, but none has done so in a single, complete system, nor in an interactive social robot. One of the main advantages of the ARDS is the possibility of communicating with the robot either with or without a grammar, that is, using natural language.

Grammars are formed by rules that delimit the acceptable sentences for a dialog. The use of grammars allows the dialog system to achieve high recognition accuracy. On the other hand, grammars considerably limit the interpretable input language. The use of a grammarless ASR in conjunction with the IEx modules enables interaction with the robot using natural language. These modules process the user’s utterances and extract semantic information from any natural-language input.
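The trade-off between grammar-based and grammarless input can be seen in a toy Python sketch. The grammar rules, the stopword list and the function names below are assumptions made for illustration, and the keyword filter is only a stand-in for the IEx modules, not how the ARDS extracts semantic content.

```python
import re

# Hypothetical toy grammar: only sentences matching a rule are accepted.
GRAMMAR_RULES = [
    re.compile(r"^turn (on|off) the (tv|radio)$"),
    re.compile(r"^tell me about \w+$"),
]

# Hypothetical stopword list used by the stand-in extractor.
STOPWORDS = {"a", "an", "and", "i", "it", "me", "the", "to"}


def grammar_accepts(utterance: str) -> bool:
    """Return True only if the utterance matches one of the grammar rules."""
    text = utterance.lower().strip()
    return any(rule.match(text) for rule in GRAMMAR_RULES)


def extract_keywords(utterance: str) -> list:
    """Grammarless stand-in: keep every token that is not a stopword."""
    tokens = re.findall(r"[a-z']+", utterance.lower())
    return [t for t in tokens if t not in STOPWORDS]


in_grammar = "Tell me about Paris"
free_form = "I went to Paris last summer and loved it"

accepted_in_grammar = grammar_accepts(in_grammar)  # matches the second rule
accepted_free_form = grammar_accepts(free_form)    # no rule matches
keywords = extract_keywords(free_form)             # still yields content words
```

The grammar rejects the free-form sentence outright, while the grammarless path still recovers content words from it, at the cost of the accuracy guarantees that a restricted grammar provides.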

Later, this information is used in the dialog, showing related multimedia content. In addition, the ARDS facilitates maintaining a coherent dialog. The main topics of the dialog can be extracted; thus, the robot can keep talking about the same matter or detect when the topic changes. Encouraging pro-active human–robot dialogs is also a key point. The IEn module provides new related information about what the user is talking about. The robot can take the initiative and introduce this new information in the dialog, driving the dialog to new areas while coherence is kept.
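One simple way to picture topic tracking is to compare the entities extracted from consecutive utterances. The sketch below uses Jaccard overlap with an arbitrary threshold; both the measure and the threshold are assumptions for illustration, not the method used by the ARDS.

```python
def jaccard(a: set, b: set) -> float:
    """Jaccard similarity of two sets; two empty sets count as identical."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def topic_changed(previous: set, current: set, threshold: float = 0.2) -> bool:
    """Flag a topic change when the entity overlap falls below the threshold."""
    return jaccard(previous, current) < threshold


# Illustrative entity sets for three consecutive utterances.
turn_1 = {"madrid", "football"}
turn_2 = {"football", "league"}   # shares "football" with turn_1
turn_3 = {"weather", "rain"}      # shares nothing with turn_2

same_topic = topic_changed(turn_1, turn_2)
new_topic = topic_changed(turn_2, turn_3)
```

Here the first pair shares one of three distinct entities, so the dialog is treated as staying on topic, while the second pair shares none and is flagged as a change.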

Although it could also be applied to other areas, it is important to remember that the ARDS has been designed for social robots, which are robots intended mainly for HRI. For these robots, it is important to engage people in the interaction loop. Considering the strong points already mentioned (natural language understanding, coherence and proactive dialogs), the ARDS tries to improve this engagement.

Moreover, the sentiment extracted from the user’s message can also help to improve engagement. It can be used to detect when the user is losing interest in the conversation, so that the robot can try to recover from this situation. In addition, the robot’s expressiveness is complemented with unconventional output modes, such as a tablet, which can make the result more appealing to users.
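As a rough illustration of how sentiment could signal a loss of interest, the following Python sketch keeps a sliding window of sentiment scores and raises a flag when their mean drops below a threshold. The class name `EngagementMonitor`, the score range of -1 to 1, the window size and the threshold are all assumptions, not details from the ARDS.

```python
from collections import deque


class EngagementMonitor:
    """Hypothetical monitor: flags low engagement from recent sentiment scores."""

    def __init__(self, window: int = 3, threshold: float = -0.2):
        self.scores = deque(maxlen=window)  # keeps only the last `window` scores
        self.threshold = threshold

    def update(self, sentiment: float) -> bool:
        """Add one score (assumed in [-1, 1]); return True if recovery is advised."""
        self.scores.append(sentiment)
        window_full = len(self.scores) == self.scores.maxlen
        mean = sum(self.scores) / len(self.scores)
        # Only raise the flag once a full window of evidence is available.
        return window_full and mean < self.threshold


monitor = EngagementMonitor()
# Made-up sentiment scores for four consecutive user messages.
flags = [monitor.update(s) for s in (0.5, -0.1, -0.4, -0.6)]
```

With these made-up scores the flag is raised only on the fourth message, once the three most recent scores are all negative; a robot could react at that point by changing topic or asking the user a question.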

Source: Vienna University

Authors: Fernando Alonso-Martin | Alvaro Castro-Gonzalez | Francisco Javier Fernandez de Gorostiza Luengo | Miguel Angel Salichs