ABSTRACT

The reconstruction of the physical environment using a depth sensor involves data-intensive computations which are difficult to implement on mobile systems (e.g., tracking and aligning the position of the sensor with the depth maps). In this paper, we present two practical experimental setups for scanning and reconstructing real objects employing low-price, off-the-shelf embedded components and open-source libraries. As a test case, we scan and reconstruct a 23 m high statue using an octocopter without employing external hardware.

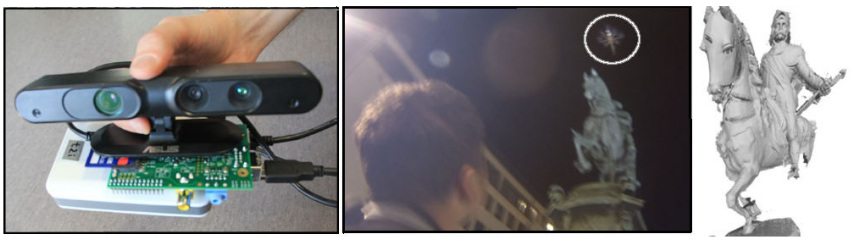

Figure 1: Reconstruction of a statue using a multicopter: (left) handheld ASUS Xtion depth sensor – Raspberry Pi – battery system; (middle) remotely controlled multicopter while recording depth maps; (right) reconstructed 3D model of the statue.

RELATED WORK

We review applications of real-time reconstruction performed on the ground indoors or outdoors, and research employing multicopters where SLAM is used in navigation and environment mapping.

Low-cost depth sensors support new types of applications based on real-time reconstruction of the physical environment. Google Tango [tan] attempts to give mobile devices an understanding of space and motion by integrating depth sensors and combining their output with the accelerometer and gyroscope data of mobile phones. Chow et al. propose a mobile mapping system for indoor environments that integrates an IMU, depth maps from two Kinects, and range images from a LiDAR system.

EXPERIMENTS

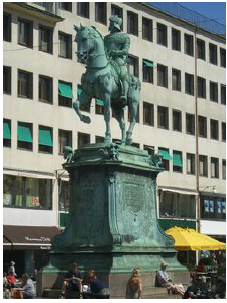

Figure 2: The scanned statue.

We present two setups for mid-air reconstruction that obtain range images from depth sensors using an octocopter. Both setups are based on off-the-shelf hardware components and open-source software. As a test case, we reconstructed a sculpture that is 23.3 meters high and is therefore impractical to scan without an aerial device (Fig. 2).

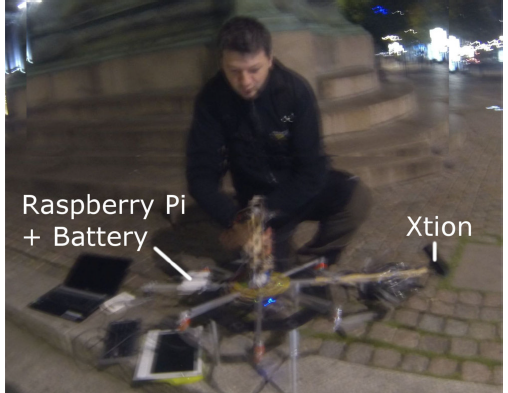

Figure 3: Components of the mid-air reconstruction system.

The octocopter frame was built from aluminum rods mounted in a star shape. Turnigy 2216 brushless motors were mounted at the ends of the eight rods. The flight controller was Mikrokopter [mks]. The Lithium Polymer 4s battery of 14.8V and 5A supported a 10-minute flight time. Two batteries were used. One rod was extended and the Xtion was mounted on it. For balance, a 5V battery connected to the RPi (Fig. 3) was mounted on the opposite rod.

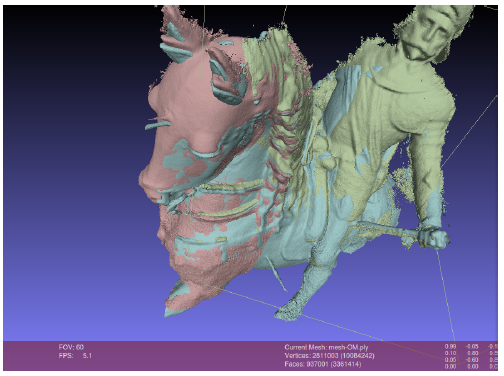

Figure 4: A screenshot of Meshlab during the manual mesh alignment.

The meshes obtained in the previous stage were aligned and visualized in Meshlab (Fig. 4). This manual process took several hours, mainly because of the loss of camera tracking and the limited reconstruction volume of Kinect Fusion.
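Aligning partial meshes amounts to moving each mesh into a common coordinate frame with a rigid transform (a rotation plus a translation). The following is a minimal sketch of applying such a transform to a list of vertices, assuming a rotation about the z axis only. The function names `rigid_transform_z` and `apply_transform` are hypothetical and illustrative; they are not part of Meshlab or of the pipeline used in the experiment.

```python
import math
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def rigid_transform_z(angle_deg: float, translation: Sequence[float]) -> List[List[float]]:
    """Build a 4x4 homogeneous matrix: rotation about z, then translation.

    Hypothetical helper for illustration; a real alignment would use a
    general 3D rotation.
    """
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_transform(matrix: List[List[float]], vertices: Sequence[Vertex]) -> List[Vertex]:
    """Apply a 4x4 homogeneous transform to every vertex of a mesh."""
    moved = []
    for x, y, z in vertices:
        p = (x, y, z, 1.0)
        # Only the first three rows are needed for the transformed position.
        moved.append(tuple(sum(matrix[r][k] * p[k] for k in range(4)) for r in range(3)))
    return moved


# Rotate a partial mesh by 90 degrees about z and lift it by 2 units.
m = rigid_transform_z(90.0, (0.0, 0.0, 2.0))
aligned = apply_transform(m, [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)])
```

In a manual alignment, the rotation and translation are chosen interactively by the user for each partial mesh rather than computed.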

Figure 5: Jetson board connected to the ASUS Xtion depth sensor on top of a battery.

In the second experiment, we tested the capabilities of the NVIDIA Jetson TK1 board for real-time reconstruction. We have not yet mounted this system on a multicopter because the reconstruction performance requires improvement. Here we present our current, promising results on this platform.

The Jetson TK1 is a parallel mobile platform recently released by NVIDIA for embedded applications such as computer vision, robotics, and automotive [Teg]. It delivers 326 GFLOPS at low cost ($192) and low power consumption (10 W). The Jetson TK1 is powered by the Tegra K1, which currently sets the performance record for embedded system chips. The hardware set-up is depicted in Fig. 5.
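To put these figures in perspective, the short sketch below derives throughput per watt and per dollar from the three numbers quoted above. The function name `efficiency` is hypothetical, and the values are peak figures as stated in the text, not measured performance.

```python
# Figures quoted in the text for the Jetson board.
PEAK_GFLOPS = 326.0
PRICE_USD = 192.0
POWER_WATTS = 10.0


def efficiency(gflops: float, watts: float, price_usd: float) -> dict:
    """Return peak throughput per watt and per dollar (illustrative helper)."""
    return {
        "gflops_per_watt": gflops / watts,
        "gflops_per_usd": gflops / price_usd,
    }


tk1 = efficiency(PEAK_GFLOPS, POWER_WATTS, PRICE_USD)
print(f"{tk1['gflops_per_watt']:.1f} GFLOPS/W")   # 32.6 GFLOPS/W
print(f"{tk1['gflops_per_usd']:.2f} GFLOPS/USD")  # 1.70 GFLOPS/USD
```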

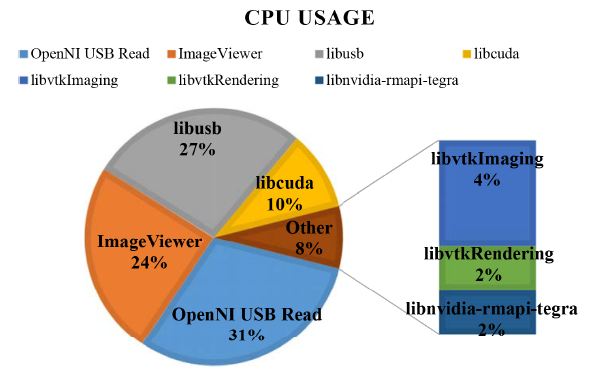

Figure 6: Experiment 2 library calls and their CPU usage.

This frame rate was considered insufficient for attempting the reconstruction on the multicopter, so we started to analyze bottlenecks with NVIDIA System Profiler. Fig. 6 shows the library calls responsible for the highest CPU usage together with their relative percentage of CPU load within a single frame.
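The relation between per-call time, relative load within a frame, and the resulting frame rate can be sketched as follows. This is a simplified model that assumes the calls run sequentially within one frame. The call names and timings in `sample_ms` are hypothetical placeholders, not the measurements shown in Fig. 6.

```python
from typing import Dict, List, Tuple


def relative_load(call_times_ms: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return each call's share of the frame time in percent, largest first."""
    total = sum(call_times_ms.values())
    if total <= 0:
        raise ValueError("total frame time must be positive")
    shares = [(name, 100.0 * t / total) for name, t in call_times_ms.items()]
    return sorted(shares, key=lambda item: item[1], reverse=True)


def frame_rate(call_times_ms: Dict[str, float]) -> float:
    """Frames per second if the calls run back to back within one frame."""
    return 1000.0 / sum(call_times_ms.values())


# Hypothetical per-frame timings in milliseconds (NOT data from the paper).
sample_ms = {
    "depth_upload": 20.0,
    "camera_tracking": 50.0,
    "volume_integration": 25.0,
    "raycast": 5.0,
}

shares = relative_load(sample_ms)
fps = frame_rate(sample_ms)
for name, percent in shares:
    print(f"{name}: {percent:.1f}%")
print(f"{fps:.1f} frames per second")
```

Sorting by share makes the dominant call stand out, which is the call where optimization yields the largest gain in frame rate.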

DISCUSSION AND FUTURE WORK

We presented two experimental setups to obtain the geometry of a statue that does not allow a direct scan from the ground. The first experiment employed the widely available Raspberry Pi platform. The reconstruction process and results showed the need for visualizing and processing the depth maps in real-time.

Concerning RQ1, and based on the insights from our first set-up, we developed a hardware set-up using the Jetson TK1, which enables 3D reconstruction in real time on the multicopter. This allows online analysis of the current state of the reconstructed mesh, enabling its correction and re-scanning if necessary. In this second experiment we show that nearly real-time reconstruction is achievable; however, faster performance is desirable and more optimization is required. This set-up also posed new research questions, such as:

- what is a good path for moving the sensor?

- what interface and visualization would allow the user to have a complete understanding of what was scanned?

- how shall the path of scanning be modified so that the user is able to correct the scan?

Concerning RQ2, we found that real-time reconstruction and visualization are necessary in order to fully reconstruct the real object and obtain a quality mesh of it. Controlling the octocopter flight path while checking the state of the reconstruction is a challenge, but it might be solved with a screen that is permanently in the field of view of the user who controls the octocopter.

Source: Chalmers University of Technology

Authors: Alexandru Dancu | Marco Fratarcangeli | Mickaël Fourgeaud | Zlatko Franjcic | Daniel Chindea | Morten Fjeld