ABSTRACT

This study proposes a vision-based intelligent nighttime driver assistance and surveillance system (VIDASS system) implemented as a set of embedded software components and modules, which are integrated into a component-based system framework on an embedded heterogeneous dual-core platform.

To this end, this study develops and implements computer vision and sensing techniques for nighttime vehicle detection, collision warning determination, and traffic event recording. The proposed system processes road-scene frames of the area in front of the host car, captured by CCD sensors mounted on the host vehicle.

These vision-based sensing and processing technologies are integrated and implemented on an ARM-DSP heterogeneous dual-core embedded platform. Peripheral devices, including image grabbing devices, communication modules, and other in-vehicle control devices, are also integrated to form an in-vehicle embedded vision-based nighttime driver assistance and surveillance system.

THE PROPOSED NIGHTTIME DRIVER ASSISTANCE AND EVENT RECORDING SYSTEM

Figure 2. A sample nighttime road scene

The input image sequences are captured from the vision system. These sensed frames reflect nighttime road environments appearing in front of the host car. Figure 2 shows a sample nighttime road scene taken from the vision system. In this sample scene, two vehicles are on the road. The left vehicle is approaching in the opposite direction on the neighboring lane, and the right vehicle is moving in the same direction as the camera-assisted host car.

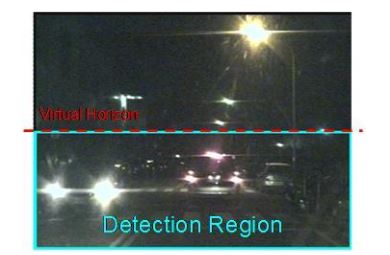

Figure 4. The detection region and virtual horizon for bright object extraction in Figure 2

Accordingly, to screen out non-vehicle illuminant objects such as street lamps and traffic lights located above the midpoint of the vertical y-axis (i.e., the “horizon”), and to reduce computation cost, the bright object extraction process is performed only on the bright components located under the virtual horizon (Figure 4).
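The restriction to the region under the virtual horizon can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `extract_bright_pixels`, the fixed intensity threshold of 200, and the list-of-rows frame representation are all assumptions made for illustration. Only the placement of the horizon at half the frame height follows the description above.

```python
def extract_bright_pixels(frame, threshold=200, horizon_ratio=0.5):
    """Return a binary mask of bright pixels located under the virtual horizon.

    frame: list of rows, each a list of grayscale intensities (0-255).
    threshold: hypothetical fixed brightness cutoff (an assumption).
    horizon_ratio: fraction of the frame height where the horizon lies;
                   0.5 corresponds to half of the vertical axis.
    """
    height = len(frame)
    horizon_row = int(height * horizon_ratio)
    mask = []
    for y, row in enumerate(frame):
        if y < horizon_row:
            # Rows above the virtual horizon are skipped entirely, which
            # discards street lamps and traffic lights and saves computation.
            mask.append([0] * len(row))
        else:
            # Rows under the horizon are thresholded pixel by pixel.
            mask.append([1 if value >= threshold else 0 for value in row])
    return mask


# Toy 4x4 frame: one bright spot above the horizon, two below it.
frame = [
    [250, 10, 10, 10],   # bright spot above the horizon (e.g., a street lamp)
    [10, 10, 10, 10],
    [10, 240, 10, 230],  # two bright spots under the horizon (e.g., vehicle lights)
    [10, 10, 10, 10],
]
mask = extract_bright_pixels(frame)
```

In this toy frame, the bright pixel in the first row is ignored because it lies above the horizon, while the two bright pixels in the third row are retained as candidate vehicle lights.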

EMBEDDED SYSTEM IMPLEMENTATIONS

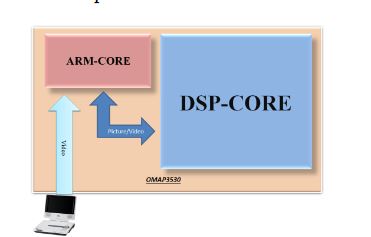

Figure 9. The TI OMAP3530 Embedded Experimental Platform

In this study, the proposed VIDASS system was implemented on an ARM-DSP heterogeneous dual-core embedded platform, the TI OMAP3530 platform (Figure 9). The TI OMAP3530 platform is an efficient solution for portable and handheld systems from the TI DaVinci™ Digital Video Processor family. This platform consists of one ARM Cortex-A8 general-purpose processor running at 600 MHz and one TI C64x+ digital signal processor running at 430 MHz on a single chip.

Figure 10. The software architecture of the VIDASS system on the ARM-DSP heterogeneous dual-core embedded platform

The proposed VIDASS system uses the TI DVSDK software architecture to run the real-time vision-based computing modules on the ARM-DSP heterogeneous dual-core embedded platform, as Figure 10 illustrates. The study adopts a component-based framework to develop the embedded software framework of the proposed VIDASS system.
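The idea of a component-based framework can be sketched as follows. This is an illustrative sketch only and does not reproduce the VIDASS software or any TI DVSDK interface: the `Pipeline` class, the three stub components, the dictionary keys, and the 15 m warning distance are hypothetical names and values chosen for the example. The sketch shows only that each module exposes the same interface, so that modules can be registered and chained independently.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Every component takes a per-frame context dict and returns it, updated.
Component = Callable[[Dict], Dict]


@dataclass
class Pipeline:
    """Hypothetical container that chains components in registration order."""
    components: List[Component] = field(default_factory=list)

    def register(self, component: Component) -> "Pipeline":
        self.components.append(component)
        return self

    def process(self, context: Dict) -> Dict:
        for component in self.components:
            context = component(context)
        return context


def vehicle_detection(context: Dict) -> Dict:
    # Stub: a real module would analyze the frame; here the distances to
    # detected vehicles are assumed to be supplied in the input.
    context["vehicles_m"] = list(context.get("distances_m", []))
    return context


def collision_warning(context: Dict) -> Dict:
    # Stub: warn when any vehicle is closer than an assumed 15 m limit.
    limit = context.get("warn_distance_m", 15.0)
    context["warning"] = any(d < limit for d in context["vehicles_m"])
    return context


def event_recording(context: Dict) -> Dict:
    # Stub: request video recording whenever a warning is raised.
    context["record"] = context["warning"]
    return context


pipeline = (
    Pipeline()
    .register(vehicle_detection)
    .register(collision_warning)
    .register(event_recording)
)

near = pipeline.process({"distances_m": [40.0, 12.0]})
far = pipeline.process({"distances_m": [40.0]})
```

Because the components share one interface, a module can be replaced or reordered without changes to the others, which is the main motivation for a component-based design.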

EXPERIMENTAL RESULTS

Figure 16. The user interface of the proposed VIDASS system

Figure 16 shows the main system screen of the proposed VIDASS system, which consists of three buttons for system configuration, system starting, and system stopping. The system configuration button sets system values such as the voice volume, control signaling, and traffic event video recording, whereas the system starting and system stopping buttons start and stop the proposed system, respectively.

CONCLUSIONS

This study presents a vision-based intelligent nighttime driver assistance and surveillance system (VIDASS system). The framework of the proposed system consists of a set of embedded software components and modules, which are integrated into a component-based system on an embedded heterogeneous multi-core platform. This study also proposes computer vision techniques for processing road-scene frames of the area in front of the host car, captured by a mounted CCD camera, and implements these vision-based techniques as embedded software components and modules.

These vision-based technologies have been integrated and implemented on an ARM-DSP multi-core embedded platform. Peripheral devices, including image grabbing devices, mobile communication modules, and other in-vehicle control devices, are also integrated to form an in-vehicle embedded vision-based nighttime driver assistance and surveillance system.

Experimental results demonstrate that the proposed system is effective and offers advantages for integrated vehicle detection, collision warning, and traffic event recording to improve driver surveillance in various nighttime road environments and traffic conditions. In future studies, a feature-based vehicle tracking method will be developed to more effectively detect and process on-road vehicles with different numbers of vehicle lights, such as motorbikes.

In addition, the proposed driver assistance system can be improved and extended by applying more sophisticated machine learning techniques, such as Support Vector Machine (SVM) classifiers, to multiple cues, including vehicle lights and bodies. Such extensions could further enhance detection feasibility under difficult weather conditions (such as rainy and dusky conditions) and strengthen classification capability across a more comprehensive range of vehicle types, such as sedans, buses, trucks, lorries, and small and heavy motorbikes.

Source: National Taipei University

Authors: Yen-Lin Chen | Hsin-Han Chiang | Chuan-Yen Chiang | Chuan-Ming Liu | Shyan-Ming Yuan | Jenq-Haur Wang