ABSTRACT

For social robots to be successfully integrated and accepted within society, they need to be able to interpret human social cues that are displayed through natural modes of communication. In particular, a key challenge in the design of social robots is developing the robot’s ability to recognize a person’s affective states (emotions, moods, and attitudes) in order to respond appropriately during social human–robot interactions (HRIs).

In this paper, we present and discuss social HRI experiments we have conducted to investigate the development of an accessibility-aware social robot able to autonomously determine a person’s degree of accessibility (rapport, openness) toward the robot based on the person’s natural static body language. In particular, we present two one-on-one HRI experiments to: 1) determine the performance of our automated system in recognizing and classifying a person’s accessibility levels and 2) investigate how people interact with an accessibility-aware robot that determines its own behaviors based on a person’s speech and accessibility levels.

HUMAN BODY LANGUAGE RECOGNITION DURING HRI

Fig.1. Socially assistive robot Brian 2.1 and its Kinect sensor

In this paper, we incorporate a robust automated recognition and classification system, using the Kinect sensor, for determining the accessibility levels of a person during one-on-one social HRI. The system can identify the interactant in cluttered realistic environments using a statistical model together with geometric and depth features. Static body poses are then accurately obtained using a learning method. This system is integrated into our socially assistive robot Brian 2.1 (Fig.1) to allow the human-like robot to uniquely determine its own accessibility-aware behaviors during noncontact one-on-one social interactions in order to provide task assistance to users.

AUTOMATED ACCESSIBILITY FROM BODY LANGUAGE CLASSIFICATION TECHNIQUE

(a) Head and lower arms. (b) Head and crossed arms. (c) Head and two arms touching. (d) Lower arm and arm touching head. (e) Both lower arms and head touching.

Based on the skin color identification results, a binary image is generated to isolate skin regions, as shown in Fig.3. In general, NISA body poses displayed by a person generate between one and three skin regions in each binary image.
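To make the idea of counting skin regions in a binary image concrete, the following is a minimal sketch using a 4-connected flood fill. It is not the authors' implementation: the function name `count_regions`, the `min_pixels` noise threshold, and the toy mask are all assumptions introduced for illustration.

```python
from collections import deque

def count_regions(binary, min_pixels=1):
    """Count 4-connected regions of 1-pixels in a binary image (list of rows).

    Regions smaller than min_pixels are ignored (hypothetical noise filter).
    """
    rows = len(binary)
    cols = len(binary[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    regions = 0
    for r in range(rows):
        for c in range(cols):
            if binary[r][c] != 1 or seen[r][c]:
                continue
            # Flood-fill one region with breadth-first search and measure its size.
            size = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                size += 1
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and binary[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if size >= min_pixels:
                regions += 1
    return regions

# Toy mask (assumed data): a head blob and two separate lower-arm blobs.
mask = [
    [0, 1, 1, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 0, 1],
    [1, 0, 0, 0, 0, 0, 1],
]
print(count_regions(mask))
```

In this toy mask, the head and the two lower arms appear as three separate regions. A pose such as crossed arms would merge the arm blobs and lower the count.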

PERFORMANCE COMPARISON STUDY

Fig.4. Example trunk orientations/leans and arm orientations using joint locations provided by the Kinect body pose estimation technique

(a) Trunks: A, arms: N. (b) Trunks: T with a sideways lean, arms: N. (c) Trunks: T, arms: T.

The Kinect SDK utilizes a random decision forest and local mode finding to generate joint locations of up to two people from depth images. The person closest to the robot is identified as the user. We have developed a technique to identify the static body pose orientations and leans in order to determine accessibility levels from the Kinect SDK joint locations during social HRI. To do this, the upper trunk is defined as the plane formed by connecting the points corresponding to the joints of the right and left shoulders and the spine (middle of the trunk along the back).
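As a rough illustration of selecting the user as the person closest to the robot, the sketch below picks the tracked skeleton whose reference joint is nearest the camera origin. It assumes the Kinect is mounted on the robot, so that distance to the camera approximates distance to the robot. The dictionary layout, the joint name `"spine"`, and the function `closest_user` are hypothetical and are not part of the Kinect SDK.

```python
import math

def closest_user(skeletons, ref_joint="spine"):
    """Return the index of the skeleton nearest the camera origin, or None.

    skeletons: list of dicts mapping joint name -> (x, y, z) in camera
    coordinates (metres). This layout is an assumption for illustration.
    """
    best_index, best_dist = None, math.inf
    for i, joints in enumerate(skeletons):
        if ref_joint not in joints:
            continue  # skip skeletons missing the reference joint
        d = math.dist((0.0, 0.0, 0.0), joints[ref_joint])
        if d < best_dist:
            best_index, best_dist = i, d
    return best_index

# Two tracked people (assumed data); the second stands closer to the sensor.
people = [
    {"spine": (0.5, 0.0, 2.5)},
    {"spine": (-0.2, 0.0, 1.4)},
]
print(closest_user(people))
```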

The lower trunk is identified as the plane formed by connecting the points of the left, right, and hip center joints. The lower and upper trunks are shown in Fig.4. The relative angle between the normal of each plane and the Kinect camera axis is then used to determine an individual’s lower and upper trunk orientations with respect to the robot. The position of the left and right shoulders relative to the left and right hips and the angle between the normals of the planes are used to determine the lean of the trunk.
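The trunk-plane geometry described above can be sketched as follows. This is an illustrative computation, not the authors' code: the helper names, the choice of `(0, 0, 1)` as the camera axis, and the folding of angles into the range 0 to 90 degrees (because the sign of a plane normal depends on the order of the three points) are all assumptions. The source does not state the angle thresholds that map to toward, neutral, or away orientations, so none are invented here.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def plane_normal(p1, p2, p3):
    """Unit normal of the plane through three 3-D joint positions."""
    n = _cross(_sub(p2, p1), _sub(p3, p1))
    length = math.sqrt(sum(c * c for c in n))
    if length == 0.0:
        raise ValueError("joints are collinear; plane is undefined")
    return tuple(c / length for c in n)

def folded_angle_deg(u, v):
    """Angle between two unit vectors in degrees, folded into [0, 90]."""
    dot = sum(a * b for a, b in zip(u, v))
    dot = max(-1.0, min(1.0, dot))  # guard acos against rounding error
    angle = math.degrees(math.acos(dot))
    return min(angle, 180.0 - angle)

def trunk_angle(left, right, middle, camera_axis=(0.0, 0.0, 1.0)):
    """Angle between a trunk plane's normal and the assumed camera axis."""
    return folded_angle_deg(plane_normal(left, right, middle), camera_axis)

# Upper trunk rotated 45 degrees about the vertical axis (assumed joint data).
d = 0.2 * math.cos(math.radians(45))
upper = ((-d, 0.5, 2.0 - d), (d, 0.5, 2.0 + d), (0.0, 0.2, 2.0))
# Lower trunk squarely facing the camera: left hip, right hip, hip center.
lower = ((-0.15, 0.0, 2.0), (0.15, 0.0, 2.0), (0.0, 0.05, 2.0))

upper_angle = trunk_angle(*upper)
lower_angle = trunk_angle(*lower)
between = folded_angle_deg(plane_normal(*upper), plane_normal(*lower))
print(round(upper_angle, 3), round(lower_angle, 3), round(between, 3))
```

The same `folded_angle_deg` helper serves both uses named in the text: the angle of each trunk plane relative to the camera axis, and the angle between the upper and lower trunk normals that contributes to the lean estimate.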

(a) Accessibility level IV pose. (b) Accessibility level III pose. (c) Accessibility level II pose. (d) Accessibility level I pose.

Overall, the participants displayed 223 different static poses during the experiments. Fig.5 shows four example poses displayed during the aforementioned interaction experiments with Brian 2.1. Columns (i) and (ii) present the 2-D color and 3-D data of the segmented static poses. The body part segmentation results and the corresponding ellipsoid models obtained from the multimodal pose estimation are shown in columns (iii) and (iv). Last, the multi-joint models obtained from the Kinect SDK body pose estimation approach are presented in column (v).

The poses in Fig.5 consist of the following: 1) hands touching in front of the trunk with the upper trunk in a toward position and the lower trunk in a neutral position; 2) one arm touching the other arm which is touching the head while leaning to the side with the upper trunk in a neutral position and the lower trunk in a toward position; 3) arms crossed in front of the trunk with both trunks in neutral positions; and 4) arms at the sides with both trunks in away positions.

ACCESSIBILITY AWARE INTERACTION STUDY

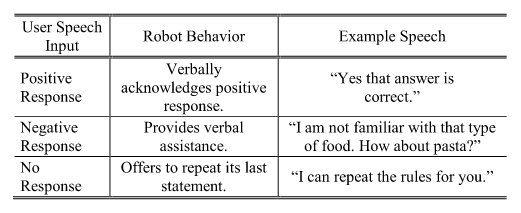

Table VI: Non-Accessibility-Aware Robot Behaviors

Fig.6. Brian 2.1 providing verbal assistance while swaying its trunk during the non-accessibility-aware behavior type

To initiate each interaction, Brian 2.1 greets the user by name. To end the interaction, Brian 2.1 informs the user that the tasks have been completed and says goodbye. The robot’s non-accessibility-aware behaviors are summarized in Table VI. Fig.6 shows a visual example of this robot behavior type.

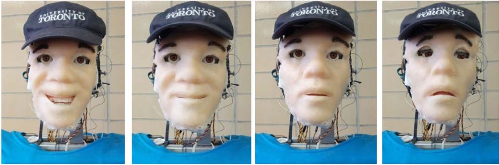

Fig.7. Brian 2.1’s facial expressions

(a) High positive valence. (b) Positive valence. (c) Neutral valence. (d) Negative valence.

For high positive valence, an open-mouth smile is used because it conveys increased positive valence more distinctly than a closed-mouth smile. The robot displays positive valence using a closed-mouth smile and a happy tone of voice. Neutral valence is displayed using a combination of a neutral facial expression and a neutral tone of voice. Negative valence is displayed by Brian 2.1 using a sad facial expression and tone of voice, where the latter has a slower speed and lower pitch than the robot’s neutral voice. Examples of Brian 2.1’s facial expressions are shown in Fig.7.

CONCLUSION

In this paper, we implemented the first automated static body language identification and categorization system for designing an accessibility-aware robot that can identify and adapt its own behaviors to the accessibility levels of a person during one-on-one social HRI. We presented two sets of social HRI experiments. The first consisted of a performance comparison study, which showed that our multimodal static body pose estimation approach is more robust and accurate in identifying a person’s accessibility levels than a system that utilizes the Kinect SDK joint locations.

The second set of HRI experiments investigated how individuals interact with an accessibility-aware social robot, which determines its own behaviors based on the accessibility levels of a user toward the robot. The results indicated that the participants were more accessible toward an accessibility-aware robot than toward a non-accessibility-aware robot, and perceived the former to be more socially intelligent. Overall, our results show the potential of integrating an accessibility identification and categorization system into a social robot, allowing the robot to interpret, classify, and respond to adaptor-style body language during social interactions.

Our results motivate future work to extend our technique to scenarios that include interactions with more than one person and individuals who are sitting. Furthermore, we will consider extending the current system to an affect-aware system that fuses other modes of communication with static body language, such as head pose and facial expression, as well as dynamic gestures.

Source: Wineyard University

Authors: Derek McColl | Chuan Jiang | Goldie Nejat
