ABSTRACT:

NAND Flash-based Solid State Disks (SSDs) have many attractive technical merits, such as low power consumption, light weight, shock resistance, tolerance of hotter operating regimes, and extraordinarily high performance for random read access. These merits make SSDs immensely popular and widely employed in many types of environments, including portable devices, personal computers, large data centers, and distributed data systems.

However, current SSDs still suffer from several critical inherent limitations, such as the inability to update data in place, asymmetric read and write performance, slow garbage collection processes, limited endurance, and degraded write performance with the adoption of MLC and TLC techniques. To alleviate these limitations, we propose optimizations at both the outside application layer and the SSDs’ internal layer.

Because SSDs are a good compromise between performance and price, they are widely deployed as second-level caches sitting between DRAM and hard disks to boost system performance. Due to special properties of SSDs, such as the internal garbage collection processes and limited lifetime, optimizations designed for traditional DRAM- and SRAM-based caches might not work consistently for SSD-based caches.

Therefore, for the outside application layer, our work focuses on integrating the special properties of SSDs into the optimization of SSD caches. First, we propose to leverage the out-of-place update property of SSDs to improve both the performance and lifetime of SSDs. Second, a new zero-migration garbage collection scheme is proposed for SSD read caches to reduce internal garbage collection activity and prolong the lifetime of SSDs without sacrificing cache performance.

Moreover, when SSDs are deployed as write caches, we propose a locality-driven dynamic cache allocation scheme that improves both the performance and lifetime of the SSD cache by balancing the cache hit ratio against the internal garbage collection overhead. Finally, a workload-aware hybrid ECC design is proposed to alleviate the flash write performance degradation without hurting read performance or data reliability.

SNIPPETS:

Proposed Approaches:

Our optimizations target both the outside application layers and the internal components. For the outside application layers, we focus on the case where SSDs are deployed as caches. Previous work has shown severe interference between reads and writes when read and write requests are mixed together and sent to the same SSD.

The interference can especially degrade the read performance of an SSD cache because write and erase latencies are much higher than read latency. Moreover, mixing read and write data in the same Flash block can lead to higher garbage collection overhead. Besides, Xia et al. have shown, by analyzing real-world I/O trace files, that read and write requests can be well separated.

Flash-aware High-performance and Endurable Cache:

An SSD is a good compromise among performance, capacity, and cost. DRAM is too costly and is not a persistent storage medium (it loses data when a power outage occurs). Conventional HDDs are too slow.

Therefore, SSDs are widely used as caches sitting between DRAM and hard disk drives (HDDs) to fill the huge performance gap between them. Despite all these attractive merits, SSDs suffer from several inherent limitations, especially the limited number of erase cycles. Each flash block can only be erased a limited number of times, after which the block becomes unreliable and is marked as a bad block.

Flash-based SSDs have several distinct properties compared with hard disk drives. Two of the most important are erase-before-write and out-of-place update. A page can only be updated in place after erasing the whole block that contains it, and a block contains multiple pages. The erase operation takes several milliseconds, which would degrade the write performance of SSDs, so out-of-place update is adopted to alleviate the influence of slow erase operations.

Instead of updating the data in the original physical location, the new data is written to a new free location and the previous data is marked as invalid which will be reclaimed in the future.
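The out-of-place update described above can be illustrated with a toy page-mapped translation layer. This is only a minimal sketch under simplifying assumptions: the class name `TinyFTL`, the sequential free-page allocator, and the page states are hypothetical and are not taken from the dissertation or from any real SSD firmware.

```python
# Toy model of out-of-place update (illustrative assumptions only).
FREE, VALID, INVALID = "free", "valid", "invalid"


class TinyFTL:
    """Hypothetical page-mapped flash translation layer with no garbage collection."""

    def __init__(self, num_pages):
        self.state = [FREE] * num_pages   # state of each physical page
        self.data = [None] * num_pages    # payload stored in each physical page
        self.mapping = {}                 # logical page number -> physical page number
        self.next_free = 0                # assumed: free pages are handed out in order

    def write(self, lpn, payload):
        """Write a logical page to a fresh physical page and return that page number."""
        if self.next_free >= len(self.state):
            raise RuntimeError("no free pages left; garbage collection would be needed")
        old = self.mapping.get(lpn)
        if old is not None:
            # The stale copy is only marked invalid; it stays on flash until an erase.
            self.state[old] = INVALID
        ppn = self.next_free
        self.next_free += 1
        self.state[ppn] = VALID
        self.data[ppn] = payload
        self.mapping[lpn] = ppn
        return ppn

    def read(self, lpn):
        """Return the current payload of a logical page."""
        return self.data[self.mapping[lpn]]


ftl = TinyFTL(num_pages=8)
first = ftl.write(7, "v1")
second = ftl.write(7, "v2")   # update goes to a new physical page
```

After the second write, the old physical page is marked invalid but still holds its previous contents. This is the property that the flash-aware cache design in this work sets out to exploit.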

Experimental Methodology:

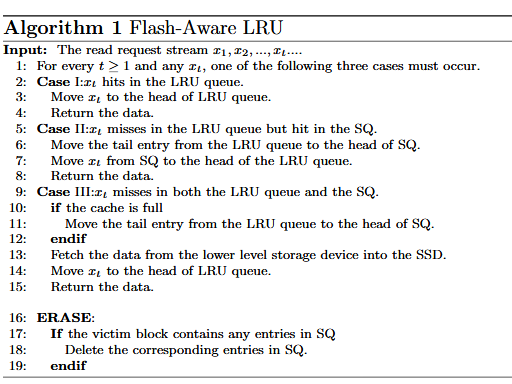

We modified Disksim with the SSD extension to evaluate our proposed flash-aware cache schemes. A table lists the main parameters of our experiments. Since LRU and ARC are two of the most widely used cache replacement algorithms, we choose the standard LRU and ARC algorithms as our baselines and implement both of them in the Disksim simulator.
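For readers unfamiliar with the LRU baseline, the following minimal sketch shows how a hit ratio can be measured for a plain LRU cache over a block trace. The function name `lru_hit_ratio` and the trace format (a list of block identifiers) are assumptions made for illustration; this is not the Disksim implementation used in the experiments.

```python
from collections import OrderedDict


def lru_hit_ratio(trace, capacity):
    """Replay a list of block ids through an LRU cache and return the hit ratio."""
    cache = OrderedDict()  # keys ordered from least to most recently used
    hits = 0
    for key in trace:
        if key in cache:
            hits += 1
            cache.move_to_end(key)          # refresh recency on a hit
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)   # evict the least recently used entry
            cache[key] = True
    return hits / len(trace) if trace else 0.0
```

A cyclic trace such as `[1, 2, 3, 1, 2, 3]` never hits in a two-entry LRU cache but hits on every reuse once the cache holds three entries, which illustrates why hit ratio is reported across several cache capacities.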

Experimental Results:

Cache hit ratio, average response time, and erase count are the three main metrics used in this paper to evaluate our proposed flash-aware and zero-migration garbage collection cache designs. The first subsection shows the results when only our flash-aware design is applied.

Cache hit ratios of LRU and ARC are evaluated with cache capacities of 3GB, 4GB, 5GB, and 6GB. The over-provisioning configurations are 15%, 25%, and 35%.

Zero-migration Garbage Collection Scheme for Flash Read Cache:

NAND Flash-based Solid State Disks (SSDs) have been deployed in a wide range of application scenarios, including portable devices, laptops, and high-performance computing systems, due to many attractive technical merits, such as low power consumption, light weight, shock resistance, tolerance of hot operating regimes, and extraordinarily high performance for random read access.

Experimental Methodology and Results:

To evaluate the efficiency of our proposed design, we integrated our zero-migration garbage collection scheme with LRU and ARC, which are two popular cache replacement algorithms. Other cache replacement algorithms can be easily tailored and integrated with our design.
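The difference between conventional garbage collection and the zero-migration idea can be sketched as follows. The sketch assumes a victim block is given as a list of `(logical_page, is_valid)` pairs and that every cached page has a backing copy on the hard disk, as this work states for read caches. The function names and the returned dictionary are hypothetical.

```python
def collect_with_migration(victim):
    """Conventional GC: copy every valid page elsewhere, then erase the block."""
    migrated = [lpn for lpn, valid in victim if valid]
    return {
        "page_writes": len(migrated),  # extra flash writes caused by migration
        "erases": 1,
        "dropped": [],                 # nothing leaves the cache
    }


def collect_zero_migration(victim, cache_index):
    """Zero-migration GC sketch: erase directly and drop valid pages from the cache.

    Dropping is safe only under the stated assumption that the data also
    exists on the backing store; a later access simply misses and refetches.
    """
    dropped = [lpn for lpn, valid in victim if valid]
    cache_index.difference_update(dropped)  # forget the dropped entries
    return {"page_writes": 0, "erases": 1, "dropped": dropped}


victim_block = [(10, True), (11, False), (12, True), (13, False)]
index = {10, 12, 20}
conventional = collect_with_migration(victim_block)
zero = collect_zero_migration(victim_block, index)
```

In this toy case the conventional path pays two extra page writes, while the zero-migration path pays none and removes pages 10 and 12 from the cache index. The trade-off is potential extra misses on the dropped pages.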

Locality-driven Dynamic Flash Cache Allocation:

Nowadays, both personal laptops and large data centers are equipped with large main memory to bridge the huge performance gap between the high-performance processors and slow storage systems. This large main memory can be effective for hiding the latency of read-intensive workloads, especially for workloads with good locality.

Experimental Methodology and Results:

To verify the efficiency of our proposed cache design, we modified Disksim with the SSD extension to implement it. A table lists the main parameters of our simulator. The Flash page size is 4KB and each Flash block consists of 64 Flash pages.
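The allocation scheme evaluated here relies on a miss ratio curve. As a hedged illustration, the sketch below computes such a curve for LRU using the classic stack-distance (Mattson) approach. This is a generic textbook technique, not necessarily how the dissertation builds its curve, and the function name `miss_ratio_curve` is an assumption.

```python
def miss_ratio_curve(trace, max_size):
    """Return LRU miss ratios for cache sizes 1..max_size (in cached items)."""
    if not trace:
        return []
    stack = []                                # most recently used item is last
    hits_at_distance = [0] * (max_size + 1)   # index d counts reuses at depth d
    for key in trace:
        if key in stack:
            # Depth 1 means the item was the most recently used one.
            distance = len(stack) - stack.index(key)
            if distance <= max_size:
                hits_at_distance[distance] += 1
            stack.remove(key)
        stack.append(key)
    curve = []
    hits = 0
    for size in range(1, max_size + 1):
        hits += hits_at_distance[size]        # a cache of this size hits all depths <= size
        curve.append(1 - hits / len(trace))
    return curve
```

One pass over the trace yields the miss ratio for every cache size at once, which is what makes a curve like this usable as an input to a dynamic sizing decision. The list-based stack is quadratic in the worst case and is meant only for small illustrative traces.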

Improving MLC Flash Performance with Workload-aware Differentiated ECC:

NAND Flash-based Solid State Disks (SSDs) have been widely deployed in various environments because of their high performance, non-volatility, and low power consumption. To continuously increase capacity and reduce the cost per bit, manufacturers are aggressively scaling down cell geometries and storing more bits of information per flash cell.

Experimental Methodology and Results:

We modified Disksim with the SSD extension to verify our proposed design. For our workload-aware differentiated ECC scheme, we assume that the device supports only two write schemes: the normal-cost write mode and a low-cost write mode that doubles the ∆Vp.

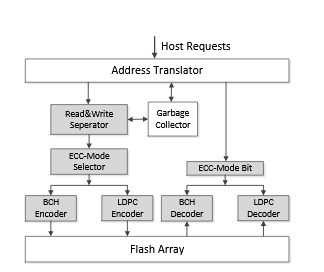

Architecture and Working Flow:

The figure gives an overview of our workload-aware differentiated ECC design. In addition to the typical components of SSDs, such as the address translator and garbage collector, our design adds a read and write separator, an ECC-mode selector, an ECC mode bit, and a hybrid ECC system that supports both BCH and LDPC. A 1-bit ECC mode tag is attached to each page as metadata to help the system choose the corresponding decoder for a read request.
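The role of the 1-bit ECC mode tag on the read path can be sketched as a simple dispatch. The mapping of tag values to decoders (0 for BCH, 1 for LDPC), the page layout, and the placeholder decoder functions are all assumptions for illustration; real BCH and LDPC decoding is not implemented here.

```python
from enum import IntEnum


class EccMode(IntEnum):
    """Assumed encoding of the 1-bit mode tag stored in page metadata."""
    BCH = 0
    LDPC = 1


def decode_bch(raw):
    # Placeholder: a real decoder would correct bit errors in `raw`.
    return ("bch", raw)


def decode_ldpc(raw):
    # Placeholder: a real decoder would run iterative LDPC decoding.
    return ("ldpc", raw)


DECODERS = {EccMode.BCH: decode_bch, EccMode.LDPC: decode_ldpc}


def read_page(page):
    """Pick the decoder from the page's mode tag and decode its raw contents.

    `page` is a hypothetical dict with keys 'ecc_mode' (0 or 1) and 'raw'.
    """
    decoder = DECODERS[EccMode(page["ecc_mode"])]
    return decoder(page["raw"])
```

Keeping the tag in per-page metadata lets each read select its decoder without consulting any global state.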

CONCLUSIONS:

In this dissertation, we make the following contributions to improve the performance and endurance of NAND-based SSDs:

1. We propose a novel flash-aware high-performance and endurable cache by leveraging the special out-of-place update property of SSDs. Because of out-of-place updates, when SSDs are used as caches, a cache eviction only removes the metadata of the cache entry from the cache queue; the actual user data still exists inside the SSD until the whole flash block is erased.

Our flash-aware cache design tries to utilize the evicted but still available data to improve the cache performance and prolong the lifetime of the Flash-based cache. The experimental results demonstrate that our flash-aware cache design improves performance by up to 40% and, in the best case, prolongs the endurance of the SSD cache by more than 70%.

2. We propose a new zero-migration garbage collection scheme to reduce the overhead and frequency of internal garbage collection processes for flash-based read caches. A typical garbage collection process requires valid data migration to move the valid pages to other free locations before erasing the whole victim block, which can introduce extra write operations and hurt the endurance of SSDs. Our zero-migration scheme aggressively erases the whole victim block without performing the costly valid data migration, based on the following observation.

When SSDs are used as a read cache, all the data inside the SSD cache has an exact backup on the hard disks or in the write buffer; therefore, aggressively erasing the victim block and skipping the data migration will never result in data loss. The experimental results show that our zero-migration garbage collection scheme can prolong the lifetime of the SSD cache by up to 72% without sacrificing cache performance.

3. We propose a locality-driven dynamic flash cache allocation scheme. When SSDs are used as write caches, traditional cache optimizations oriented toward hit ratio cannot obtain consistent performance benefits and may even hurt the lifetime of SSDs due to the internal garbage collection activities.

Therefore, our locality-driven dynamic flash cache allocation scheme leverages the miss ratio curve to dynamically configure the SSD cache, aiming for the best cache performance by balancing the cache hit ratio against the internal garbage collection overhead. The experimental results indicate that our design achieves similar or even better performance compared with the static optimal cache configurations.

4. We propose a workload-aware differentiated ECC scheme to improve the SSD write performance without sacrificing the read performance. The main idea is to dynamically classify the logical pages into three categories: write-only, read-only, and overlapped part.

For write-only logical pages, a low-cost write with a strong ECC scheme is applied to increase the write performance. For written logical pages in the overlapped part, low-cost writes with strong ECC are used selectively, based on their relative write and read hotness.

For any read logical pages encoded with a stronger ECC, we rewrite them with the normal-cost write and ECC scheme if their hotness exceeds a pre-defined threshold. The evaluation results show that our workload-aware differentiated ECC scheme can reduce the write and read response times by 48% and 11% on average, respectively. Even compared with the latest previous work, our workload-aware design still gains about 4% in write performance and 11% in read performance.
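The classification policy in contribution 4 can be sketched as below. The sketch assumes that hotness is approximated by per-page read and write counters and that "relative hotness" in the overlapped part means comparing those two counts. Both are illustrative assumptions, as are all function names and the threshold parameter.

```python
def classify(reads, writes):
    """Place a logical page into one of the three categories named above."""
    if writes > 0 and reads == 0:
        return "write-only"
    if reads > 0 and writes == 0:
        return "read-only"
    if reads > 0 and writes > 0:
        return "overlapped"
    return "untouched"  # page never accessed; not a category from the text


def choose_write_mode(reads, writes):
    """Pick the write mode for an incoming write to a logical page."""
    category = classify(reads, writes)
    if category == "write-only":
        return "low-cost write + strong ECC"
    if category == "overlapped" and writes > reads:
        # Assumed rule: use the low-cost mode only when writes dominate reads.
        return "low-cost write + strong ECC"
    return "normal-cost write + normal ECC"


def should_rewrite_on_read(uses_strong_ecc, read_hotness, threshold):
    """Rewrite a strongly encoded page in normal mode once it becomes read-hot."""
    return uses_strong_ecc and read_hotness > threshold
```

For example, a page with 1 read and 9 writes would be written in the low-cost mode under these assumptions, while a page with 9 reads and 1 write would stay in the normal mode.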

FUTURE WORK:

Currently, for the cache optimizations, our work focuses on the case where the SSD-based read cache and write cache are separated because of the severe interference between Flash reads and Flash writes. However, an architecture with separated read and write caches might introduce additional overhead to guarantee data consistency between the read cache and the write cache.

Moreover, separating the read cache and write cache might lead to inefficient use of cache capacity if the workloads are read-dominated or write-dominated. Therefore, as part of our future work, we are going to integrate the cache optimizations proposed in this work with a unified cache architecture and develop solutions to alleviate the internal Flash read and write interference.

Source: Virginia Commonwealth University

Authors: Qianbin Xia