ABSTRACT

Blob detection is a common task in vision-based applications. Most existing algorithms target general-purpose computers, and very few can be adapted to the computing restrictions of embedded platforms. This paper focuses on the design of an algorithm capable of real-time blob detection that minimizes system memory consumption.

The proposed algorithm detects objects in a single image scan; it is based on a linked-list tree data structure used to label blobs depending on their shape and node information. An example application showing the results of a blob detection co-processor has been built on low-power field-programmable gate array (FPGA) hardware as a step towards developing a smart video surveillance system.

The detection method is intended for general-purpose application. As such, several test cases focused on character recognition are also examined. The results obtained present a fair trade-off between accuracy and memory requirements, and prove the validity of the proposed approach for real-time implementation on resource-constrained computing platforms.

RELATED CONCEPTS AND WORK

The object detection stage typically receives a binary image as input. A binary image denotes foreground pixels with a color value of 1, while the background pixels are denoted with 0. This image is also referred to as the foreground mask and is produced by a background segmentation technique that can range from a simple thresholding operation (i.e., the use of a fixed rule to determine background and foreground pixels) to an elaborate statistical algorithm (i.e., the use of historic models to determine the class of a new pixel).
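The simplest case mentioned above, a fixed thresholding rule, can be sketched as follows. This is a minimal illustration only: the function name `threshold_mask`, the 8-bit grayscale input, and the threshold value of 128 are assumptions, not details taken from the paper.

```python
def threshold_mask(gray, threshold=128):
    """Return a binary foreground mask for a grayscale image.

    gray: list of rows, each a list of pixel intensities (assumed 0..255).
    A pixel is foreground (1) when it exceeds the fixed threshold,
    and background (0) otherwise.
    """
    return [[1 if pixel > threshold else 0 for pixel in row] for row in gray]


# Hypothetical 3x3 frame: bright pixels form a small foreground region.
frame = [
    [10, 20, 200],
    [15, 220, 210],
    [12, 18, 25],
]
mask = threshold_mask(frame)
# mask == [[0, 0, 1], [0, 1, 1], [0, 0, 0]]
```

A statistical background model would replace the fixed comparison with a per-pixel decision based on history, but it would still produce a mask of the same form.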

BLOB RECOGNITION PROCESS

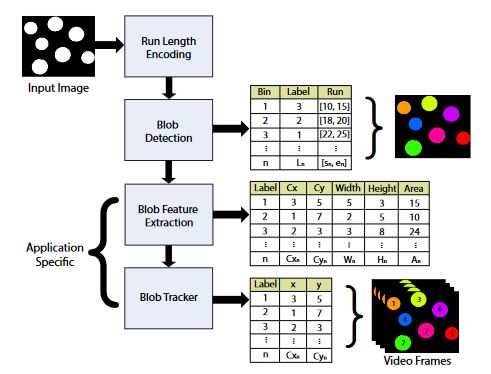

Figure 1. Blob detection and tracking. Full system overview

The tracker uses a minimum distance vector (MDV) obtained for different blob metrics, such as centroid and area. This information allows each detected blob to be identified through time regardless of its location in the scene, as long as its geometrical properties remain reasonably consistent. Figure 1 depicts the full overview of a blob detection and tracking system.
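One way to picture minimum-distance matching on centroid and area is the sketch below. It is not the paper's tracker: the Euclidean distance, the `area_weight` scaling factor, the greedy assignment order, and all names are assumptions made for illustration.

```python
import math


def feature_distance(a, b, area_weight=0.01):
    """Distance between two blobs in (centroid x, centroid y, area) space.

    area_weight is a hypothetical scaling factor that keeps the area
    difference from dominating the centroid difference.
    """
    dx = a["cx"] - b["cx"]
    dy = a["cy"] - b["cy"]
    da = (a["area"] - b["area"]) * area_weight
    return math.sqrt(dx * dx + dy * dy + da * da)


def match_blobs(tracked, detected):
    """Greedily assign each tracked blob to its closest unclaimed detection.

    tracked: dict mapping a track id to a blob dict.
    detected: list of blob dicts found in the current frame.
    Returns a dict mapping track id to an index into detected.
    """
    matches = {}
    free = set(range(len(detected)))
    for track_id, blob in tracked.items():
        if not free:
            break
        best = min(free, key=lambda i: feature_distance(blob, detected[i]))
        matches[track_id] = best
        free.remove(best)
    return matches


tracked = {
    "A": {"cx": 10, "cy": 10, "area": 100},
    "B": {"cx": 50, "cy": 40, "area": 400},
}
detected = [
    {"cx": 52, "cy": 41, "area": 390},
    {"cx": 12, "cy": 9, "area": 105},
]
print(match_blobs(tracked, detected))  # {'A': 1, 'B': 0}
```

The match holds even though the detections arrive in a different order than the tracks, which is the property the tracker relies on when blobs move across the scene.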

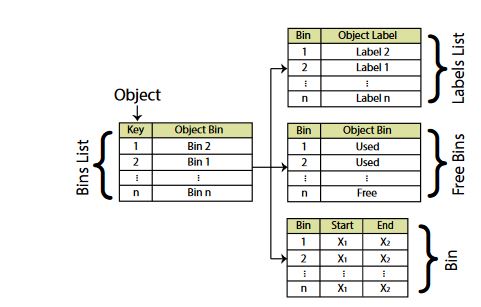

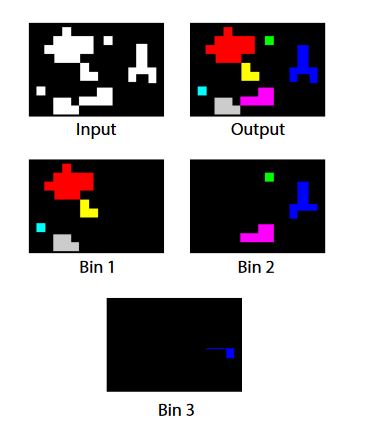

Figure 3. Relationships between linked-lists and bin data structure

A pair of rows is processed from left to right. The initial key assignment is, therefore, dependent on the object’s initial position in the image. The object key is used to link the object to a bin; the bin is then linked to a label. The list of current free and used bins is also linked to the tree structure. An extra data structure can be used to store the start and end points of a detected object. Figure 3 depicts the linked-lists used and their interdependence.
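The key, bin, and label relationships described above, together with the free and used bin lists, can be sketched with index-based links as shown below. The class `BinPool`, its method names, and the fixed-capacity pool are hypothetical; the paper's actual node layout is not reproduced here.

```python
class BinPool:
    """Fixed pool of bins threaded onto a free list and a used list.

    Links are stored as indices (as a memory-constrained design might do)
    rather than as object references.
    """

    def __init__(self, capacity):
        # next_bin[i] is the bin that follows bin i in whichever list holds it.
        self.next_bin = [i + 1 if i + 1 < capacity else None for i in range(capacity)]
        self.free_head = 0 if capacity > 0 else None
        self.used_head = None
        self.label = [None] * capacity  # bin -> label
        self.key_to_bin = {}            # object key -> bin

    def allocate(self, key, label):
        """Move the first free bin to the used list and link key -> bin -> label."""
        if self.free_head is None:
            raise MemoryError("no free bins")
        b = self.free_head
        self.free_head = self.next_bin[b]
        self.next_bin[b] = self.used_head
        self.used_head = b
        self.label[b] = label
        self.key_to_bin[key] = b
        return b

    def release(self, key):
        """Unlink the bin owned by key from the used list and return it to the free list."""
        b = self.key_to_bin.pop(key)
        prev, cur = None, self.used_head
        while cur != b:
            prev, cur = cur, self.next_bin[cur]
        if prev is None:
            self.used_head = self.next_bin[b]
        else:
            self.next_bin[prev] = self.next_bin[b]
        self.next_bin[b] = self.free_head
        self.free_head = b
        self.label[b] = None

    def label_of(self, key):
        return self.label[self.key_to_bin[key]]

    def used_bins(self):
        out, cur = [], self.used_head
        while cur is not None:
            out.append(cur)
            cur = self.next_bin[cur]
        return out


pool = BinPool(3)
pool.allocate("key0", label=1)
pool.allocate("key1", label=2)
pool.release("key0")            # bin 0 goes back to the free list
pool.allocate("key2", label=3)  # and is reused for the next object
```

Because bins are recycled through the free list, memory use is bounded by the pool capacity rather than by the image size.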

APPLICATION EXAMPLE

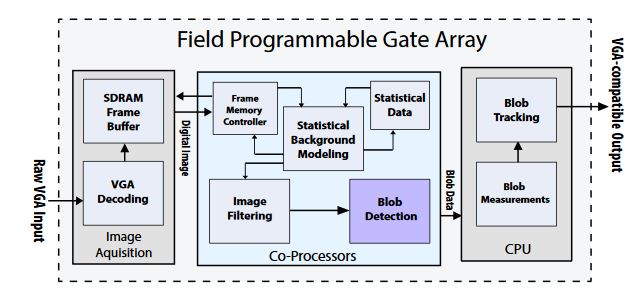

Figure 10. Application example: complete video surveillance embedded system

An additional memory controller requests pixels from SDRAM and feeds them to a background classifier component. The background classifier uses statistical data to filter out objects that do not belong to the original scene. The final binary mask is then processed by the blob detection co-processor. The component realizes the algorithm described in Section 3.6. Detection results can also be displayed on a VGA-compatible monitor. Figure 10 depicts a block diagram of the complete surveillance embedded system.

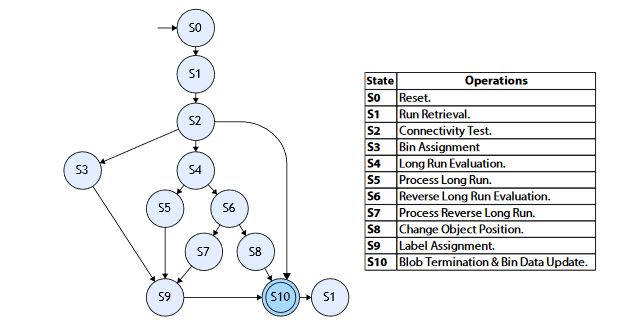

Figure 12. Simplified control FSM diagram for hardware implementation

Figure 12 depicts a simplified state diagram for the hardware implementation of the control FSM. The diagram closely follows Algorithm 1. The figure does not consider latency introduced by data transfers. In the S0 state, all signals are set to their initial values. In S1, a pair of new runs (pixel packets) is retrieved from the register bank. The connectivity test is performed on these runs in S2. Depending on the results, state flow can be directed to three different states.
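A connectivity test on a pair of runs from adjacent rows can be sketched as a three-way classification. The sketch assumes 4-connectivity and runs given as inclusive `(start, end)` column ranges; the outcome names are hypothetical and are not claimed to match the three states of the FSM in Figure 12.

```python
def connectivity_test(upper, lower):
    """Classify a pair of runs taken from two adjacent rows.

    upper, lower: (start, end) column ranges, both ends inclusive.
    Under the assumed 4-connectivity, two runs are connected when their
    column ranges share at least one column.
    """
    u_start, u_end = upper
    l_start, l_end = lower
    if u_end < l_start:
        return "upper_behind"   # upper run ends before the lower run starts
    if l_end < u_start:
        return "lower_behind"   # lower run ends before the upper run starts
    return "connected"          # column ranges overlap


print(connectivity_test((2, 5), (4, 8)))  # connected
print(connectivity_test((0, 1), (3, 4)))  # upper_behind
print(connectivity_test((6, 9), (1, 3)))  # lower_behind
```

In a row-pair scan, the two non-connected outcomes indicate which row should advance to its next run before the test is repeated.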

RESULTS AND DISCUSSION

Figure 18. Test Image 1

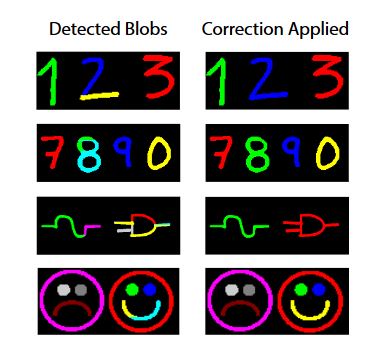

The blob detection algorithm is intended for general-purpose recognition. As such, we evaluate its performance with various images that test general detection for use in other applications. In each test, the input image is scanned just once. A correction phase may be required for certain images after the first scan analysis is performed. If a run common to more than one bin is found, correction is applied by label merging. Figure 18 shows the first test image.
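The correction rule, merging labels when the same run appears in more than one bin, can be sketched as below. The representation of a bin as a dictionary holding a label and a set of `(row, start, end)` runs, and the choice to keep the smaller label, are assumptions made for illustration.

```python
def merge_labels(bins):
    """Give bins that share a run the same label.

    bins: list of dicts with keys 'label' (int) and 'runs'
    (set of (row, start, end) tuples). Modified in place and returned.
    When two bins with different labels share a run, every bin carrying
    the larger label is relabeled with the smaller one.
    """
    for i in range(len(bins)):
        for j in range(i + 1, len(bins)):
            a, b = bins[i], bins[j]
            if a["label"] != b["label"] and a["runs"] & b["runs"]:
                old = max(a["label"], b["label"])
                new = min(a["label"], b["label"])
                for entry in bins:
                    if entry["label"] == old:
                        entry["label"] = new
    return bins


# A U-shaped object: two prongs on row 0 that join in a single run on row 1.
bins = [
    {"label": 1, "runs": {(0, 0, 2), (1, 0, 6)}},
    {"label": 2, "runs": {(0, 4, 6), (1, 0, 6)}},
    {"label": 3, "runs": {(5, 1, 2)}},
]
merge_labels(bins)
print([b["label"] for b in bins])  # [1, 1, 3]
```

The merge works only on bin contents, so it needs no additional pass over the image.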

Figure 21. Complex test images after correction is applied

The corrected results are depicted in Figure 21. Finally, input images from the University of Southern California Signal and Image Processing Institute (USC-SIPI) database have also been tested. Most of these images show uniform blobs; however, large complex shapes are broken up into multiple bins, albeit all sharing a single label.

CONCLUSIONS

Blob detection is a common task for computer vision applications. It is often performed on general-purpose computing architectures as an algorithm that relies on image storage. For implementation on embedded systems, however, system memory and computing power are limited resources, and alternative techniques must be designed. In this paper, a blob detection algorithm was proposed and developed for implementation on embedded hardware, focused on a system-on-chip application. Emphasis has been placed on low memory consumption and fast processing.

Blob information is stored in discrete data structures called bins. Bin management is achieved using linked-list structures. It is important to note that blobs are dynamic objects with properties that can change within frames. Linked-lists keep track of these changes efficiently. Moreover, linked-lists present a good trade-off between design flexibility and resource consumption. Depending on blob dynamics, several cases that hinder optimal detection can be encountered.

Complex shapes cannot be correctly identified on a single image scan and can lead to labeling errors. Such is the case of concave-up objects. To deal with this situation, an additional correction phase is also implemented. The correction phase only depends on the information contained in each data bin and does not require additional image scans or storage. Nonetheless, extra latency is added to the system. Once a blob is detected, a label and a bin are assigned; blob data are processed for feature extraction, and a motion tracking stage can be used for blob seeking through multiple video frames.

The system is used to track simple convex objects composed of circular and square contours. The correction phase has been seamlessly integrated in the detection module. A soft-core CPU is used to execute tracking software. The results obtained with this implementation show fast blob detection of simple but restricted shapes. The current version of the object sensing system is configured to detect a maximum of ten blobs presented simultaneously (i.e., in one single row of the input image).

Source: University of Southern California

Authors: Ricardo Acevedo-Avila | Miguel Gonzalez-Mendoza | Andres Garcia-Garcia