ABSTRACT

Embedded systems control and monitor a great deal of our reality. While some “classic” features are intrinsically necessary, such as low power consumption, rugged operating ranges, fast response and low cost, these systems have evolved in the last few years to emphasize connectivity functions, thus contributing to the Internet of Things paradigm.

A myriad of sensing/computing devices are being attached to everyday objects, each able to send and receive data and to act as a unique node in the Internet. Apart from the obvious necessity to process at least some data at the edge (to increase security and reduce power consumption and latency), a major breakthrough will arguably come when such devices are endowed with some level of autonomous “intelligence”.

Intelligent computing aims to solve problems for which no efficient exact algorithm can exist or for which we cannot conceive an exact algorithm. Central to such intelligence is Computer Vision (CV), i.e., extracting meaning from images and video. While not everything needs CV, visual information is the richest source of information about the real world: people, places and things.

The possibilities of embedded CV are vast, especially when combined with new applications and technologies such as deep learning, drones, home robotics, intelligent surveillance, intelligent toys and wearable cameras. This paper describes the Eyes of Things (EoT) platform, a versatile computer vision platform designed to tackle these challenges and opportunities.

STATE OF THE ART

Historically, work on wireless and distributed camera networks has been conceptually close to the Internet of Things scenario outlined above, which envisages tiny connected cameras everywhere. Vision sensor networks aim at small, power-efficient vision sensor nodes that can operate standalone. This is a natural evolution of the significant research effort made in the last few years on Wireless Sensor Networks (WSN).

EOT HARDWARE

Figure 1. Development of Eyes of Things (EoT) boards. Sizes, from left to right (in mm): 200 × 180, 100 × 100, 57 × 46

The EoT hardware has been developed in steps. Figure 1 shows the three iterations of the board. The first prototype (left) was based on the Movidius MV0182 development board, which includes a Myriad 2 SoC plus other components such as EEPROM, an HDMI transmitter, Ethernet, an SD card, an IMU, and IR and pressure sensors.

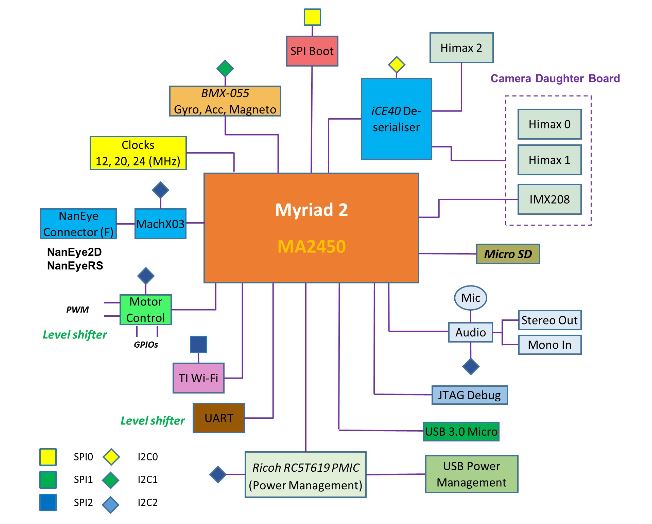

Figure 4. EoT block diagram

A block diagram of the complete system is shown in Figure 4. All processing and control are performed by the low-power Myriad 2 MA2450 SoC by Movidius (an Intel company, Santa Clara, CA, USA). This SoC was designed from the ground up for efficient, high-performance mobile computation. Myriad 2 is a heterogeneous, multicore, always-on SoC supporting computational imaging and visual awareness for mobile, wearable and embedded applications.

EOT SOFTWARE

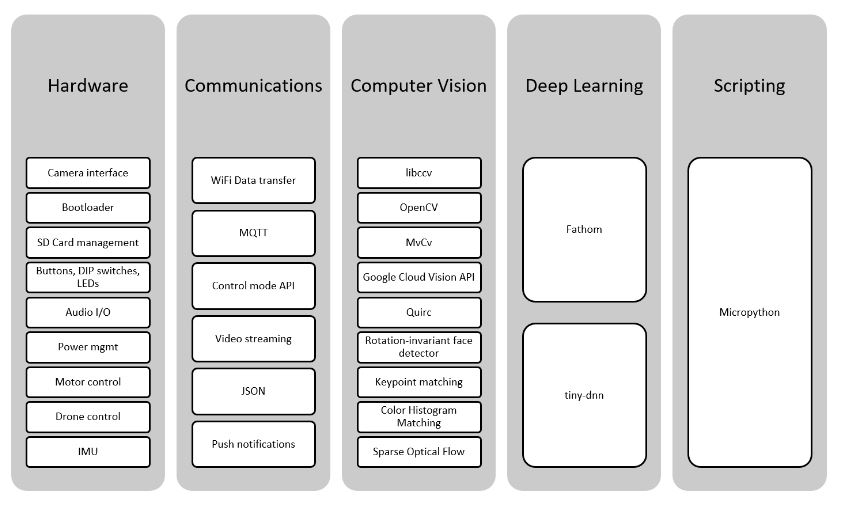

Figure 9. Main EoT software modules

The software has been developed on top of RTEMS and the Movidius Development Kit (MDK). Figure 9 shows an overview of the main software modules. A number of modules allow interaction with the hardware components. WiFi-based communication functionality allows interaction with the outside world (thus providing the ‘of Things’ qualification). Computer vision and deep learning (more precisely, convolutional neural networks) provide the tools to create intelligent applications. Finally, scripting provides an easy way to develop prototypes.

Figure 12. Battery-operated EoT streaming video to an Android smartphone

Streaming allows integration with existing services, such as video surveillance clients and cloud-based video analytics. Streaming may also be useful to configure or monitor the vision application from an external device such as a tablet or PC. For all of these reasons, EoT also implements the Real-Time Streaming Protocol (RTSP), which is typically used for video streaming in IP cameras; see Figure 12.
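RTSP is a text-based, HTTP-like control protocol: a client drives a session with requests such as OPTIONS, DESCRIBE, SETUP and PLAY, each carrying a `CSeq` sequence number, while the media itself typically flows over RTP. The Python sketch below only illustrates this request/response framing as specified for RTSP/1.0 in RFC 2326; it is not the EoT implementation, and the camera URL is a hypothetical example.

```python
from typing import Dict, Optional, Tuple


def build_rtsp_request(method: str, url: str, cseq: int,
                       headers: Optional[Dict[str, str]] = None) -> str:
    """Build an RTSP/1.0 request: request line, CSeq header, optional headers,
    CRLF line endings and a terminating empty line (RFC 2326 framing)."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    return "\r\n".join(lines) + "\r\n\r\n"


def parse_status_line(response: str) -> Tuple[str, int, str]:
    """Split the first line of an RTSP response, e.g. 'RTSP/1.0 200 OK'."""
    status_line = response.split("\r\n", 1)[0]
    version, code, reason = status_line.split(" ", 2)
    return version, int(code), reason


if __name__ == "__main__":
    # Hypothetical camera address, for illustration only.
    print(repr(build_rtsp_request("OPTIONS", "rtsp://192.168.1.10:554/live", 1)))
    print(parse_status_line("RTSP/1.0 200 OK\r\nCSeq: 1\r\n\r\n"))
```

For example, `build_rtsp_request("DESCRIBE", "rtsp://192.168.1.10:554/live", 2, {"Accept": "application/sdp"})` asks the camera for an SDP description of the stream; a real client would send it over a TCP connection (port 554 by default) and read the reply before issuing SETUP and PLAY.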

RESULTS

Figure 19. Peephole demonstrator

Peephole door viewer, Figure 19: Before leaving home, the user will attach the EoT device to the peephole. The device will continuously monitor for the presence of people and/or suspicious activity at the door (or simply someone knocking), sending alarms and pictures via the Internet (assuming home WiFi is available). Tampering detection (i.e., detecting an attempt to cover the peephole) will also be implemented and will likewise generate an alarm.
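The two alarms in this scenario could be driven by very simple image statistics. The Python sketch below is a hypothetical illustration, not the detector actually used on EoT: it flags tampering when a frame becomes nearly uniform (a covered lens yields a very low pixel standard deviation) and flags activity when the mean absolute difference between consecutive frames exceeds a threshold. Frames are assumed to be grayscale images stored as lists of rows, and both thresholds (`max_std`, `motion_threshold`) are illustrative values that would need tuning on real footage.

```python
from statistics import mean, pstdev
from typing import List

Frame = List[List[int]]  # grayscale pixels, 0-255, one list per row


def _pixels(frame: Frame) -> List[int]:
    """Flatten a frame into a single list of pixel values."""
    return [p for row in frame for p in row]


def is_tampered(frame: Frame, max_std: float = 5.0) -> bool:
    """Heuristic: a covered lens produces an almost uniform image."""
    return pstdev(_pixels(frame)) < max_std


def activity_score(prev: Frame, curr: Frame) -> float:
    """Mean absolute per-pixel difference between two frames of equal size."""
    return mean(abs(a - b) for a, b in zip(_pixels(prev), _pixels(curr)))


def check_frame(prev: Frame, curr: Frame,
                max_std: float = 5.0, motion_threshold: float = 15.0) -> str:
    """Return the alarm to raise for the current frame, or 'ok'."""
    if is_tampered(curr, max_std):
        return "tamper_alarm"
    if activity_score(prev, curr) > motion_threshold:
        return "activity_alarm"
    return "ok"
```

Tampering is checked first so that a covered lens is reported as tampering rather than as motion, even though covering the peephole also changes every pixel. A deployed version would likely add temporal filtering (for instance, requiring several consecutive alarming frames) to reduce false alarms caused by lighting changes.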

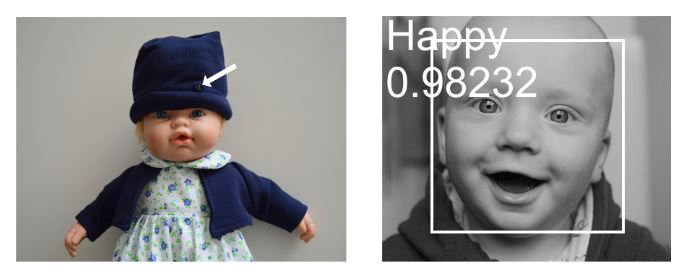

Figure 24. Smart doll with emotion recognition. Left: The camera was in the head, connected through a flex cable to the board and battery inside the body. Right: emotion recognition

This demonstrator (see Figure 24) leverages the rotation-invariant face detector as well as the CNN inference engine. Invariance to rotation is needed because the child may hold the doll at any angle, so faces can appear rotated in the camera image. The face detector crops and resizes the face, which is then fed to the CNN classifier.
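The crop, resize and classify chain can be sketched in a few lines of Python. This is a hypothetical illustration rather than the EoT inference engine: the face detector is assumed to return an axis-aligned box `(x, y, w, h)` (a rotation-invariant detector would also provide an angle used to rotate the crop upright, which is omitted here), the resize uses simple nearest-neighbour sampling, and the CNN is represented only by the raw scores (logits) it outputs. The label set `EMOTIONS` is an assumed example.

```python
import math
from typing import List, Sequence, Tuple

Image = List[List[int]]  # grayscale pixels, one list per row

# Hypothetical label set; the real classifier's classes may differ.
EMOTIONS = ("angry", "happy", "neutral", "sad", "surprised")


def crop(img: Image, box: Tuple[int, int, int, int]) -> Image:
    """Cut out the detector's box (x, y, width, height)."""
    x, y, w, h = box
    return [row[x:x + w] for row in img[y:y + h]]


def resize_nearest(img: Image, out_w: int, out_h: int) -> Image:
    """Nearest-neighbour resize to the CNN's fixed input size."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax (subtracts the max before exponentiating)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: Sequence[float]) -> Tuple[str, float]:
    """Map the CNN's output scores to the most probable emotion label."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]
```

In use, the detector's box would be passed to `crop`, the result to `resize_nearest` with the network's input size, and the network's output scores to `classify`.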

CONCLUSIONS

In the context of the Internet of Things and the growing importance of cognitive applications, this paper has described a novel sensor platform that leverages the phenomenal advances currently happening in computer vision. The building elements have all been optimized for efficiency, size and flexibility, resulting in the final EoT form-factor board plus a stack of useful software. Beyond the hardware and architectural elements, the software and protocols used have also been optimized.

The platform software and hardware specifications are open and available at the project website. Options are offered for board evaluation and for support. Both the hardware and software repositories include wikis with documentation and examples, and the information and support provided make it possible to create a custom design if needed. EoT is an example of an IoT sensor that embodies a number of tenets:

• As sensor capabilities improve, intelligence must be brought to the edge

• Edge processing alone is not sufficient; a concerted effort with cloud elements will still be needed in many applications

• In order to reach the potential audience and usage expected in IoT, complex (if powerful) embedded systems require flexibility, added-value and close interaction with other widely-used products such as smartphones/tablets

• Low-power operation requires not only low-power hardware, but also careful software design and protocol selection.
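The last tenet can be quantified with the usual first-order duty-cycling estimate: if a device spends a fraction d of its time active at power P_active and sleeps at P_sleep the rest of the time, its average power is P_avg = d·P_active + (1 - d)·P_sleep, and battery life is roughly the stored energy divided by P_avg. Software design and protocol selection act mainly on d (how rarely the processor and radio must wake up) and on the sleep power. The Python sketch below encodes this model; the numbers in it are illustrative assumptions, not measured EoT figures, and the model ignores effects such as wake-up transients and battery derating.

```python
def average_power_mw(active_mw: float, sleep_mw: float, duty_cycle: float) -> float:
    """First-order duty-cycle model: P_avg = d * P_active + (1 - d) * P_sleep."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in [0, 1]")
    return duty_cycle * active_mw + (1.0 - duty_cycle) * sleep_mw


def battery_life_hours(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Stored energy (mAh * V = mWh) divided by average power (mW) gives hours."""
    return capacity_mah * voltage_v / avg_power_mw


if __name__ == "__main__":
    # Illustrative values only: 1 W when active, 10 mW asleep, active 10% of
    # the time, powered by a 2000 mAh, 3.7 V battery.
    p = average_power_mw(1000.0, 10.0, 0.10)
    print(p)                                   # about 109 mW
    print(battery_life_hours(2000.0, 3.7, p))  # about 67.9 h
```

Halving the duty cycle in this example (d = 0.05) drops the average power to about 59.5 mW, which shows why waking the processor and radio only when needed matters as much as choosing low-power hardware.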

The results so far suggest that the platform can be leveraged by both industry and academia to develop innovative vision-based products and services in less time. The demonstrators described above are just examples of the platform’s capabilities, and the platform is expected to be particularly useful for mobile robots, drones, surveillance, headsets and toys. In future work, we expect more sample applications to be developed by both the consortium and external partners, particularly applications that combine many EoT devices in distributed setups.

Source: University of Castilla-La Mancha

Authors: Oscar Deniz | Noelia Vallez | Jose L. Espinosa-Aranda | Jose M. Rico-Saavedra | Javier Parra-Patino | Gloria Bueno | David Moloney | Alireza Dehghani | Aubrey Dunne | Alain Pagani | Stephan Krauss | Ruben Reiser | Martin Waeny | Matteo Sorci | Tim Llewellynn | Christian Fedorczak | Thierry Larmoire | Marco Herbst | Andre Seirafi | Kasra Seirafi
